Appendix F Hints and Solutions to Selected Exercises

For the most part, solutions are provided here for odd-numbered exercises.

1 Set Theory

1.1 Set Notation and Relations

1.1.3 Exercises for Section 1.1

1.1.3.3.

1.1.3.5.

1.1.3.7.

1.2 Basic Set Operations

1.2.4 Exercises

1.2.4.1.

Answer.

-

\(\displaystyle \{2,3\}\)

-

\(\displaystyle \{0,2,3\}\)

-

\(\displaystyle \{0,2,3\}\)

-

\(\displaystyle \{0,1,2,3,5,9\}\)

-

\(\displaystyle \{0\}\)

-

\(\displaystyle \emptyset\)

-

\(\displaystyle \{ 1,4,5,6,7,8,9\}\)

-

\(\displaystyle \{0,2,3,4,6,7,8\}\)

-

\(\displaystyle \emptyset\)

-

\(\displaystyle \{0\}\)

1.2.4.3.

1.2.4.5.

1.2.4.7.

1.3 Cartesian Products and Power Sets

1.3.4 Exercises

1.3.4.1.

Answer.

-

\(\displaystyle \{(0, 2), (0, 3), (2, 2), (2, 3), (3, 2), (3, 3)\}\)

-

\(\displaystyle \{(2, 0), (2, 2), (2, 3), (3, 0), (3, 2), (3, 3)\}\)

-

\(\displaystyle \{(0, 2, 1), (0, 2, 4), (0, 3, 1), (0, 3, 4), (2, 2, 1), (2, 2, 4),\\ (2, 3, 1), (2, 3, 4), (3, 2, 1), (3, 2, 4), (3, 3, 1), (3, 3, 4)\}\)

-

\(\displaystyle \emptyset\)

-

\(\displaystyle \{(0, 1), (0, 4), (2, 1), (2, 4), (3, 1), (3, 4)\}\)

-

\(\displaystyle \{(2, 2), (2, 3), (3, 2), (3, 3)\}\)

-

\(\displaystyle \{(2, 2, 2), (2, 2, 3), (2, 3, 2), (2, 3, 3), (3, 2, 2), (3, 2, 3), (3, 3, 2), (3, 3, 3)\}\)

-

\(\displaystyle \{(2, \emptyset ), (2, \{2\}), (2, \{3\}), (2, \{2, 3\}), (3, \emptyset ), (3, \{2\}), (3, \{3\}), (3, \{2, 3\})\}\)

1.3.4.3.

1.3.4.5.

1.3.4.7.

1.3.4.9.

1.4 Binary Representation of Positive Integers

1.4.3 Exercises

1.4.3.1.

1.4.3.3.

1.4.3.5.

Answer.

There is a bit for each power of 2 up to the largest one needed to represent an integer, and you start counting with the zeroth power. For example, 2017 is between \(2^{10}=1024\) and \(2^{11}=2048\text{,}\) and so the largest power needed is \(2^{10}\text{.}\) Therefore there are \(11\) bits in binary 2017.
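The counting argument above can be sketched in Python. The helper name `bits_needed` is ours, introduced only for illustration; it finds the largest power of 2 that does not exceed the integer and adds one for the zeroth power. Python's built-in `int.bit_length` gives an independent check.

```python
def bits_needed(n: int) -> int:
    """Number of bits in the binary representation of a positive integer n."""
    k = 0
    # Find the largest k with 2**k <= n.
    while 2 ** (k + 1) <= n:
        k += 1
    # Powers 0 through k each get one bit.
    return k + 1

print(bits_needed(2017), (2017).bit_length(), bin(2017))
```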

1.4.3.7.

1.5 Summation Notation and Generalizations

1.5.3 Exercises

1.5.3.1.

1.5.3.3.

Answer.

-

\(\displaystyle \frac{1}{1 (1+1)}+\frac{1}{2 (2+1)}+\frac{1}{3 (3+1)}+\cdots +\frac{1}{n(n+1)}=\frac{n}{n+1}\)

-

\(\displaystyle \frac{1}{1(2)}+\frac{1}{2(3)}+\frac{1}{3(4)}=\frac{1}{2}+\frac{1}{6}+\frac{1}{12}=\frac{3}{4}=\frac{3}{3+1}\)

-

\(1+2^3+3^3+\cdots +n^3=\left(\frac{1}{4}\right)n^2(n+1)^2\) \(\quad 1+8+27=36 = \left(\frac{1}{4}\right)(3)^2(3+1)^2\)
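Both closed forms can be spot-checked numerically. The sketch below uses exact rational arithmetic from Python's `fractions` module; the function names are ours. Agreement for small \(n\) is evidence for the formulas, not a proof of them.

```python
from fractions import Fraction

def telescoping_sum(n):
    """1/(1*2) + 1/(2*3) + ... + 1/(n(n+1)) as an exact fraction."""
    return sum(Fraction(1, k * (k + 1)) for k in range(1, n + 1))

def cube_sum(n):
    """1 + 2^3 + ... + n^3."""
    return sum(k ** 3 for k in range(1, n + 1))

for n in range(1, 8):
    assert telescoping_sum(n) == Fraction(n, n + 1)
    assert 4 * cube_sum(n) == n ** 2 * (n + 1) ** 2

print(telescoping_sum(3), cube_sum(3))
```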

1.5.3.5.

1.5.3.7.

1.5.3.9.

2 Combinatorics

2.1 Basic Counting Techniques - The Rule of Products

2.1.3 Exercises

2.1.3.1.

2.1.3.3.

2.1.3.5.

2.1.3.7.

2.1.3.9.

2.1.3.11.

2.1.3.13.

2.1.3.15.

2.1.3.17.

Answer.

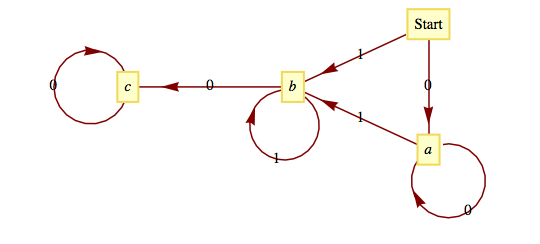

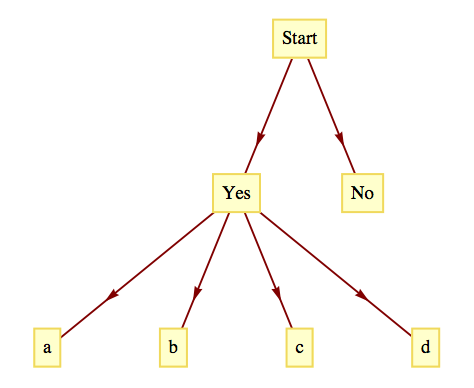

solution to exercise 17a of section 2.1. From a start node, there are two branches. The first branch, labeled yes, has four branches coming from it, one for each of the possible follow-up responses. The second branch from start is an end branch labeled no.

2.1.3.19.

2.2 Permutations

2.2.2 Exercises

2.2.2.1.

2.2.2.3.

2.2.2.5.

2.2.2.7.

2.2.2.9.

2.2.2.11.

2.3 Partitions of Sets and the Law of Addition

2.3.3 Exercises

2.3.3.1.

2.3.3.3.

2.3.3.5.

2.3.3.7.

2.3.3.9.

Solution.

We assume that \(\lvert A_1 \cup A_2 \rvert = \lvert A_1 \rvert +\lvert A_2\rvert -\lvert A_1\cap A_2\rvert \text{.}\)

\begin{equation*}

\begin{split}

\lvert A_1 \cup A_2\cup A_3 \rvert & =\lvert (A_1\cup A_2) \cup A_3 \rvert \quad Why?\\

& = \lvert A_1\cup A_2\rvert +\lvert A_3 \rvert -\lvert (A_1\cup A_2)\cap A_3\rvert \quad Why? \\

& =\lvert A_1\cup A_2\rvert +\lvert A_3\rvert -\lvert (A_1\cap A_3)\cup (A_2\cap A_3)\rvert \quad Why?\\

& =\lvert A_1\rvert +\lvert A_2\rvert -\lvert A_1\cap A_2\rvert +\lvert A_3\rvert \\

& \quad -(\lvert A_1\cap A_3\rvert +\lvert A_2\cap A_3\rvert -\lvert (A_1\cap A_3)\cap (A_2\cap A_3)\rvert)\quad Why?\\

& =\lvert A_1\rvert +\lvert A_2\rvert +\lvert A_3\rvert -\lvert A_1\cap A_2\rvert -\lvert A_1\cap A_3\rvert\\

& \quad -\lvert A_2\cap A_3\rvert +\lvert A_1\cap A_2\cap A_3\rvert \quad Why?

\end{split}

\end{equation*}

The law for four sets is

\begin{equation*}

\begin{split}

\lvert A_1\cup A_2\cup A_3\cup A_4\rvert & =\lvert A_1\rvert +\lvert A_2\rvert +\lvert A_3\rvert +\lvert A_4\rvert\\

& \quad -\lvert A_1\cap A_2\rvert -\lvert A_1\cap A_3\rvert -\lvert A_1\cap A_4\rvert \\

& \quad \quad -\lvert A_2\cap A_3\rvert -\lvert A_2\cap A_4\rvert -\lvert A_3\cap A_4\rvert \\

& \quad +\lvert A_1\cap A_2\cap A_3\rvert +\lvert A_1\cap A_2\cap A_4\rvert \\

& \quad \quad +\lvert A_1\cap A_3\cap A_4\rvert +\lvert A_2\cap A_3\cap A_4\rvert \\

& \quad -\lvert A_1\cap A_2\cap A_3\cap A_4\rvert

\end{split}

\end{equation*}

Derivation:

\begin{equation*}

\begin{split}

\lvert A_1\cup A_2\cup A_3\cup A_4\rvert & = \lvert (A_1\cup A_2\cup A_3)\cup A_4\rvert \\

& = \lvert A_1\cup A_2\cup A_3\rvert +\lvert A_4\rvert -\lvert (A_1\cup A_2\cup A_3)\cap A_4\rvert\\

& = \lvert A_1\cup A_2\cup A_3\rvert +\lvert A_4\rvert \\

& \quad -\lvert (A_1\cap A_4)\cup (A_2\cap A_4)\cup (A_3\cap A_4)\rvert \\

& = \lvert A_1\rvert +\lvert A_2\rvert +\lvert A_3\rvert -\lvert A_1\cap A_2\rvert -\lvert A_1\cap A_3\rvert \\

& \quad -\lvert A_2\cap A_3\rvert +\lvert A_1\cap A_2\cap A_3\rvert +\lvert A_4\rvert -[\lvert A_1\cap A_4\rvert \\

& \quad+\lvert A_2\cap A_4\rvert +\lvert A_3\cap A_4\rvert -\lvert (A_1\cap A_4)\cap (A_2\cap A_4)\rvert \\

& \quad -\lvert (A_1\cap A_4)\cap (A_3\cap A_4)\rvert -\lvert (A_2\cap A_4)\cap (A_3\cap A_4)\rvert \\

& \quad +\lvert (A_1\cap A_4)\cap (A_2\cap A_4)\cap (A_3\cap A_4)\rvert] \\

& =\lvert A_1\rvert +\lvert A_2\rvert +\lvert A_3\rvert +\lvert A_4\rvert -\lvert A_1\cap A_2\rvert -\lvert A_1\cap A_3\rvert \\

& \quad -\lvert A_2\cap A_3\rvert -\lvert A_1\cap A_4\rvert -\lvert A_2\cap A_4\rvert \quad -\lvert A_3\cap A_4\rvert \\

& \quad +\lvert A_1\cap A_2\cap A_3\rvert +\lvert A_1\cap A_2\cap A_4\rvert \\

& \quad +\lvert A_1\cap A_3\cap A_4\rvert +\lvert A_2\cap A_3\cap A_4\rvert \\

& \quad -\lvert A_1\cap A_2 \cap A_3\cap A_4\rvert

\end{split}

\end{equation*}
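The law for four sets follows a pattern that holds for any finite collection of sets: add the sizes of intersections of an odd number of sets and subtract the sizes of intersections of an even number. A minimal sketch follows; the function name and the four sample sets are ours, chosen only for illustration.

```python
from itertools import combinations

def union_size_by_inclusion_exclusion(sets):
    """Size of the union, computed from sizes of intersections only."""
    total = 0
    for r in range(1, len(sets) + 1):
        sign = 1 if r % 2 == 1 else -1   # add odd-sized groups, subtract even-sized
        for group in combinations(sets, r):
            total += sign * len(set.intersection(*group))
    return total

# Hypothetical sample sets A_1, ..., A_4.
sample = [{1, 2, 3, 4}, {3, 4, 5}, {1, 4, 5, 6}, {2, 4, 6, 7}]
print(union_size_by_inclusion_exclusion(sample), len(set.union(*sample)))
```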

2.3.3.11.

Answer.

2.4 Combinations and the Binomial Theorem

2.4.4 Exercises

2.4.4.1.

2.4.4.2.

2.4.4.3.

2.4.4.5.

Answer.

Each path can be described as a sequence of R’s and U’s with exactly six of each. The six positions in which R’s could be placed can be selected from the twelve positions in the sequence in \(\binom{12}{6} =924\) ways. We can generalize this logic and see that there are \(\binom{m+n}{m}\) paths from \((0,0)\) to \((m,n)\text{.}\)
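The count can be confirmed by brute force for small grids. The sketch below enumerates every string of R's and U's of length \(m+n\) and keeps those with exactly \(m\) R's; `count_paths` is our own helper name, and `math.comb` supplies the binomial coefficient.

```python
from itertools import product
from math import comb

def count_paths(m, n):
    """Count sequences of R and U of length m+n that contain exactly m R's."""
    return sum(1 for seq in product("RU", repeat=m + n) if seq.count("R") == m)

print(count_paths(6, 6), comb(12, 6))
```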

2.4.4.7.

2.4.4.9.

2.4.4.11.

2.4.4.13.

2.4.4.15.

Answer.

Assume \(\lvert A \rvert =n\text{.}\) If we let \(x=y=1\) in the Binomial Theorem, we obtain \(2^n=\binom{n}{0}+\binom{n}{1}+\cdots +\binom{n}{n}\text{,}\) with the right side of the equality counting all subsets of \(A\) containing \(0, 1, 2, \dots , n\) elements. Hence \(\lvert P(A)\rvert =2^{\lvert A \rvert}\text{.}\)
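The same grouping of subsets by size can be carried out explicitly. In this sketch `power_set` is a helper of our own that lists the subsets of each size \(k\), so the total is a sum of binomial coefficients.

```python
from itertools import combinations
from math import comb

def power_set(a):
    """All subsets of a, listed by increasing size."""
    items = list(a)
    return [set(c) for k in range(len(items) + 1) for c in combinations(items, k)]

A = {"a", "b", "c", "d"}   # any small example set works here
n = len(A)
print(len(power_set(A)), sum(comb(n, k) for k in range(n + 1)), 2 ** n)
```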

2.4.4.17.

3 Logic

3.1 Propositions and Logical Operators

3.1.3 Exercises

3.1.3.1.

3.1.3.3.

Answer.

-

\(2>5\) and 8 is an even integer. False.

-

If \(2\leqslant 5\) then 8 is an even integer. True.

-

If \(2\leqslant 5\) and 8 is an even integer then 11 is a prime number. True.

-

If \(2\leqslant 5\) then either 8 is an even integer or 11 is not a prime number. True.

-

If \(2\leqslant 5\) then either 8 is an odd integer or 11 is not a prime number. False.

-

If 8 is not an even integer then \(2>5\text{.}\) True.

3.1.3.5.

3.2 Truth Tables and Propositions Generated by a Set

3.2.4 Exercises

3.2.4.1.

Answer.

-

\(\displaystyle \begin{array}{cc} p & p\lor p \\ \hline 0 & 0 \\ 1 & 1 \\ \end{array}\)

-

\(\displaystyle \begin{array}{ccc} p & \neg p & p\land (\neg p) \\ \hline 0 & 1 & 0 \\ 1 & 0 & 0 \\ \end{array}\)

-

\(\displaystyle \begin{array}{ccc} p & \neg p & p\lor (\neg p) \\ \hline 0 & 1 & 1 \\ 1 & 0 & 1 \\ \end{array}\)

-

\(\displaystyle \begin{array}{cc} p & p\land p \\ \hline 0 & 0 \\ 1 & 1 \\ \end{array}\)

3.2.4.3.

3.2.4.5.

3.3 Equivalence and Implication

3.3.5 Exercises

3.3.5.1.

3.3.5.3.

Solution.

No. In symbolic form the question is: Is \((p\to q)\Leftrightarrow (q\to p)\text{?}\) \(\begin{array}{ccccc}

p & q & p\to q & q\to p & (p\to q)\leftrightarrow (q\to p) \\

\hline

0 & 0 & 1 & 1 & 1\\

0 & 1 & 1 & 0 & 0\\

1 & 0 & 0 & 1 & 0 \\

1 & 1 & 1 & 1 & 1 \\

\end{array}\)

This table indicates that an implication is not always equivalent to its converse.
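A truth table like the one above can be generated mechanically. The following sketch defines the conditional as `(not p) or q` and collects the rows where an implication and its converse disagree; the names `implies`, `rows`, and `disagreements` are ours.

```python
from itertools import product

def implies(p, q):
    """Truth value of the conditional p -> q."""
    return (not p) or q

# One row per assignment: (p, q, p -> q, q -> p)
rows = [(p, q, implies(p, q), implies(q, p))
        for p, q in product([False, True], repeat=2)]

# Assignments on which the implication and its converse differ.
disagreements = [(p, q) for p, q, pq, qp in rows if pq != qp]
print(disagreements)
```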

3.3.5.5.

3.3.5.7.

3.3.5.9.

Solution.

Yes. In symbolic form the question is whether, if we have a conditional proposition \(p \to q\text{,}\) is \((q\to p)\Leftrightarrow (\neg p\to \neg q)\text{?}\)

\(\begin{array}{ccccc}

p & q & q\to p & \neg p\to \neg q & (q \to p)\leftrightarrow (\neg p\to \neg q) \\

\hline

0 & 0 & 1 & 1 & 1\\

0 & 1 & 0 & 0 & 1\\

1 & 0 & 1 & 1 & 1 \\

1 & 1 & 1 & 1 & 1 \\

\end{array}\)

This table indicates that a converse is always equivalent to the inverse.

3.4 The Laws of Logic

3.4.2 Exercises

3.4.2.1.

Answer.

Let \(s=\textrm{I will study}\text{,}\) \(t=\textrm{I will learn.}\) The argument is \(((s\to t)\land (\neg t))\to (\neg s)\text{,}\) which we call \(a\text{.}\)

\begin{equation*}

\begin{array}{ccccc}

s\text{ } & t\text{ } & s\to t\text{ } & (s\to t)\land (\neg t)\text{ } & a \\

\hline

0\text{ } & 0\text{ } & 1\text{ } & 1\text{ } & 1 \\

0\text{ } & 1\text{ } & 1\text{ } & 0\text{ } & 1 \\

1\text{ } & 0\text{ } & 0\text{ } & 0\text{ } & 1 \\

1\text{ } & 1\text{ } & 1\text{ } & 0\text{ } & 1 \\

\end{array}\text{.}

\end{equation*}

Since \(a\) is a tautology, the argument is valid.
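Checking that a proposition is a tautology amounts to evaluating it on every assignment of truth values. A minimal sketch, with helper names of our own choosing:

```python
from itertools import product

def implies(p, q):
    """Truth value of the conditional p -> q."""
    return (not p) or q

def is_tautology(proposition, num_vars):
    """True if the proposition holds for every assignment of truth values."""
    return all(proposition(*values)
               for values in product([False, True], repeat=num_vars))

def argument(s, t):
    """((s -> t) and (not t)) -> (not s)"""
    return implies(implies(s, t) and (not t), not s)

print(is_tautology(argument, 2))
```

The same helper rejects an invalid pattern such as \(((s\to t)\land t)\to s\text{,}\) which fails when \(s\) is false and \(t\) is true.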

3.4.2.3.

3.5 Mathematical Systems and Proofs

3.5.4 Exercises

3.5.4.1.

Answer.

-

\begin{equation*} \begin{array}{cccc} p & q & (p\lor q)\land \neg q & ((p\lor q)\land \neg q)\to p \\ 0 & 0 & 0 & 1 \\ 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 1 \\ 1 & 1 & 0 & 1 \\ \end{array} \end{equation*}

-

\begin{equation*} \begin{array}{ccccc} p & q & (p\to q)\land \neg q & \neg p & ((p\to q)\land (\neg q))\rightarrow \neg p \\ 0 & 0 & 1 & 1 & 1 \\ 0 & 1 & 0 & 1 & 1 \\ 1 & 0 & 0 & 0 & 1 \\ 1 & 1 & 0 & 0 & 1 \\ \end{array} \end{equation*}

3.5.4.3.

Answer.

-

Direct proof:

-

\(\displaystyle d\to (a\lor c)\)

-

\(\displaystyle d\)

-

\(\displaystyle a\lor c\)

-

\(\displaystyle a\to b\)

-

\(\displaystyle \neg a \lor b\)

-

\(\displaystyle c\to b\)

-

\(\displaystyle \neg c\lor b\)

-

\(\displaystyle (\neg a\lor b)\land (\neg c\lor b)\)

-

\(\displaystyle (\neg a\land \neg c) \lor b\)

-

\(\displaystyle \neg (a\lor c)\lor b\)

Indirect proof:

-

\(\neg b\quad \) Negated conclusion

-

\(a\to b\quad \) Premise

-

\(\neg a\quad \) Indirect Reasoning (1), (2)

-

\(c\to b\quad \) Premise

-

\(\neg c\quad \) Indirect Reasoning (1), (4)

-

\((\neg a\land \neg c)\quad \) Conjunctive (3), (5)

-

\(\neg (a\lor c)\quad \) DeMorgan’s law (6)

-

\(d\to (a\lor c)\quad \) Premise

-

\(\neg d\quad \) Indirect Reasoning (7), (8)

-

\(d\quad \) Premise

-

-

Direct proof:

-

\(\displaystyle (p\to q)\land (r\to s)\)

-

\(\displaystyle p\to q\)

-

\(\displaystyle (q\to t)\land (s\to u)\)

-

\(\displaystyle q\to t\)

-

\(\displaystyle p\to t\)

-

\(\displaystyle r\to s\)

-

\(\displaystyle s\to u\)

-

\(\displaystyle r\to u\)

-

\(\displaystyle p\to r\)

-

\(\displaystyle p\to u\)

-

\(\displaystyle \neg (t\land u)\to \neg p\)

-

\(\displaystyle \neg (t\land u)\)

Indirect proof:

-

\(\displaystyle p\)

-

\(\displaystyle p\to q\)

-

\(\displaystyle q\)

-

\(\displaystyle q\to t\)

-

\(\displaystyle t\)

-

\(\displaystyle \neg (t\land u)\)

-

\(\displaystyle \neg t\lor \neg u\)

-

\(\displaystyle \neg u\)

-

\(\displaystyle s\to u\)

-

\(\displaystyle \neg s\)

-

\(\displaystyle r\to s\)

-

\(\displaystyle \neg r\)

-

\(\displaystyle p\to r\)

-

\(\displaystyle r\)

-

-

Direct proof:

-

\(\neg s\lor p\quad \) Premise

-

\(s\quad \) Added premise (conditional conclusion)

-

\(\neg (\neg s)\quad \) Involution (2)

-

\(p \quad \) Disjunctive simplification (1), (3)

-

\(p\to (q\to r)\quad \) Premise

-

\(q\to r\quad \) Detachment (4), (5)

-

\(q \quad\) Premise

Indirect proof:

-

\(\neg (s\to r)\quad \) Negated conclusion

-

\(\neg (\neg s\lor r)\quad \) Conditional equivalence (1)

-

\(s\land \neg r\quad \) DeMorgan (2)

-

\(s\quad\) Conjunctive simplification (3)

-

\(\neg s\lor p\quad \) Premise

-

\(s\to p\quad\) Conditional equivalence (5)

-

\(p \quad\) Detachment (4), (6)

-

\(p\to (q\to r)\quad\) Premise

-

\(q\to r \quad\) Detachment (7), (8)

-

\(q\quad \) Premise

-

\(r\quad\) Detachment (9), (10)

-

\(\neg r \quad\) Conjunctive simplification (3)

-

-

Direct proof:

-

\(\displaystyle p\to q\)

-

\(\displaystyle q\to r\)

-

\(\displaystyle p\to r\)

-

\(\displaystyle p\lor r\)

-

\(\displaystyle \neg p\lor r\)

-

\(\displaystyle (p\lor r)\land (\neg p\lor r)\)

-

\(\displaystyle (p\land \neg p)\lor r\)

-

\(\displaystyle 0\lor r\)

Indirect proof:

-

\(\neg r\quad \) Negated conclusion

-

\(p\lor r\quad\) Premise

-

\(p\quad\) (1), (2)

-

\(p\to q\quad\) Premise

-

\(q \quad \) Detachment (3), (4)

-

\(q\to r\quad\) Premise

-

\(r \quad \)Detachment (5), (6)

-

3.5.4.5.

Answer.

-

Let \(W\) stand for “Wages will increase,” \(I\) stand for “there will be inflation,” and \(C\) stand for “cost of living will increase.” Therefore the argument is: \(W\to I,\text{ }\neg I\to \neg C,\text{ }W\Rightarrow C\text{.}\) The argument is invalid. The easiest way to see this is through a truth table in which \(x\) is the conjunction of all premises. In one case, the seventh, \(x\to C\) is false. \(\begin{array}{ccccccccc} W & I & C & \neg I & \neg C & W\to I & \neg I\to \neg C & x & x\to C \\ \hline 0 & 0 & 0 & 1 & 1 & 1 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 0 & 1 & 0 & 0 & 1 \\ 0 & 1 & 0 & 0 & 1 & 1 & 1 & 0 & 1 \\ 0 & 1 & 1 & 0 & 0 & 1 & 1 & 0 & 1 \\ 1 & 0 & 0 & 1 & 1 & 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 1 & 0 & 0 & 0 & 0 & 1 \\ 1 & 1 & 0 & 0 & 1 & 1 & 1 & 1 & 0 \\ 1 & 1 & 1 & 0 & 0 & 1 & 1 & 1 & 1 \\ \end{array}\)

-

Let \(r\) stand for “the races are fixed,” \(c\) stand for “casinos are crooked,” \(t\) stand for “the tourist trade will decline,” and \(p\) stand for “the police will be happy.” Therefore, the argument is: \begin{equation*} (r\lor c)\to t, t\to p, \neg p\Rightarrow \neg r\text{.} \end{equation*}The argument is valid. Proof:

-

\(t\to p\quad \) Premise

-

\(\neg p\quad \) Premise

-

\(\neg t\quad \) Indirect Reasoning (1), (2)

-

\((r\lor c)\to t\quad \) Premise

-

\(\neg (r\lor c)\quad \) Indirect Reasoning (3), (4)

-

\((\neg r)\land (\neg c)\quad \) DeMorgan (5)

-

3.5.4.7.

Answer.

\(p_1\to p_k\) and \(p_k\to p_{k+1}\) together imply \(p_1\to p_{k+1}\text{.}\) It takes two steps to get to \(p_1\to p_{k+1}\) from \(p_1\to p_k\text{.}\) This means it takes \(2(100-1)\) steps to get to \(p_1\to p_{100}\) (subtract 1 because \(p_1\to p_2\) is stated as a premise). A final step is needed to apply detachment and conclude \(p_{100}\text{.}\)

3.6 Propositions over a Universe

3.6.3 Exercises

3.6.3.1.

Answer.

-

\(\displaystyle \{\{1\},\{3\},\{1,3\},\emptyset\}\)

-

\(\displaystyle \{\{3\}, \{3,4\}, \{3,2\}, \{2,3,4\}\}\)

-

\(\displaystyle \{\{1\}, \{1,2\}, \{1,3\}, \{1,4\}, \{1,2,3\}, \{1,2,4\}, \{1,3,4\}, \{1,2,3,4\}\}\)

-

\(\displaystyle \{\emptyset, \{2\}, \{3\}, \{4\}, \{2,3\}, \{2,4\}, \{3,4\}\}\)

-

\(\displaystyle \{A\subseteq U:\left| A\right| =2\}\)

3.6.3.3.

3.6.3.5.

3.6.3.7.

3.7 Mathematical Induction

3.7.4 Exercises

3.7.4.1.

Answer.

We wish to prove that \(P(n):1+3+5+\cdots +(2n-1)=n^2\) is true for \(n \geqslant 1\text{.}\) Recall that the \(n\)th odd positive integer is \(2n - 1\text{.}\)

Induction: Assume that for some \(n\geqslant 1\text{,}\) \(P(n)\) is true. Then we infer that \(P(n+1)\) follows:

\begin{equation*}

\begin{split}

1+3+\cdots +(2(n+1)-1) &= (1+3+\cdots +(2n-1) ) +(2(n+1)-1)\\

& =n^2+(2n+1) \quad \textrm{by } P(n) \textrm{ and basic algebra}\\

& =(n+1)^2 \quad \square

\end{split}

\end{equation*}

3.7.4.3.

Answer.

Proof:

-

Basis: We note that the proposition is true when \(n=1\text{:}\) \(\sum_{k=1}^{1} k^2 =1= \frac{1(2)(3)}{6}\text{.}\)

-

Induction: Assume that the proposition is true for some \(n \geq 1\text{.}\) This is the induction hypothesis.\begin{equation*} \begin{split} \sum_{k=1}^{n+1} k^2 &=\sum_{k=1}^n k^2+(n+1)^2\\ &=\frac{n(n+1)(2n+1)}{6}+(n+1)^2 \qquad \textrm{by the induction hypothesis} \\ &=\frac{(n+1)(2n^2+7n+6)}{6}\\ &=\frac{(n+1)(n+2)(2n+3)}{6}\qquad \square \end{split} \end{equation*}Therefore, the truth of the proposition for \(n\) implies the truth of the proposition for \(n+1\text{.}\)
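The algebra in the induction step can be spot-checked numerically. In the sketch below, `closed_form` is our name for \(\frac{n(n+1)(2n+1)}{6}\text{,}\) and `induction_step_holds` tests that adding \((n+1)^2\) to the formula at \(n\) gives the formula at \(n+1\text{.}\) A finite check like this supports the proof but does not replace it.

```python
def sum_of_squares(n):
    """1^2 + 2^2 + ... + n^2, computed directly."""
    return sum(k * k for k in range(1, n + 1))

def closed_form(n):
    """n(n+1)(2n+1)/6; the product is always divisible by 6."""
    return n * (n + 1) * (2 * n + 1) // 6

def induction_step_holds(n):
    """Does closed_form(n) + (n+1)^2 equal closed_form(n+1)?"""
    return closed_form(n) + (n + 1) ** 2 == closed_form(n + 1)

print(all(sum_of_squares(n) == closed_form(n) for n in range(1, 51)))
print(all(induction_step_holds(n) for n in range(1, 51)))
```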

3.7.4.5.

Solution.

Induction: Assume that for some \(n\geqslant 1\text{,}\) the formula is true.

Then:

\begin{equation*}

\begin{split}

\frac{1}{(1\cdot 2)}+\cdots +\frac{1}{n(n+1)} +\frac{1}{(n+1)(n+2)} &=\frac{n}{n+1}+\frac{1}{(n+1)(n+2)}\\

&=\frac{(n+2)n}{(n+1)(n+2)}+\frac{1}{(n+1)(n+2)}\\

&=\frac{(n+1)^2}{(n+1)(n+2)}\\

&=\frac{n+1}{n+2} \quad \square\\

\end{split}

\end{equation*}

3.7.4.7.

Answer.

Let \(A_n\) be the set of strings of zeros and ones of length \(n\) (we assume that \(\lvert A_n \rvert =2^n\) is known). Let \(E_n\) be the set of the “even” strings, and \(E_{n}^{c}=\) the odd strings. The problem is to prove that for \(n\geqslant 1\text{,}\) \(\lvert E_n \rvert =2^{n-1}\text{.}\) Clearly, \(\lvert E_1\rvert =1\text{,}\) and, if for some \(n\geqslant 1, \lvert E_n\rvert =2^{n-1}\text{,}\) it follows that \(\lvert E_{n+1}\rvert =2^n\) by the following reasoning.

We partition \(E_{n+1}\) according to the first bit: \(E_{n+1}=\{1s\mid s \in E_n^c \}\cup \{ 0s \mid s \in E_n\}\)

Since \(\{1s\mid s \in E_n^c\}\) and \(\{0s \mid s \in E_n\}\) are disjoint, we can apply the addition law. Therefore,

\begin{equation*}

\begin{split}

\quad \lvert E_{n+1}\rvert & =\lvert E_n^c \rvert +\lvert E_n \rvert \\

& =(2^n-2^{n-1})+2^{n-1} =2^n.\quad \square

\end{split}

\end{equation*}
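The partition used in this proof can be checked by enumeration. The sketch below assumes that an "even" string is one containing an even number of 1s, which is our reading of the exercise; all function names are ours.

```python
from itertools import product

def strings(n):
    """All strings of zeros and ones of length n."""
    return ["".join(bits) for bits in product("01", repeat=n)]

def is_even(s):
    """Assumption: a string is 'even' when it has an even number of 1s."""
    return s.count("1") % 2 == 0

def even_strings(n):
    return {s for s in strings(n) if is_even(s)}

def odd_strings(n):
    return {s for s in strings(n) if not is_even(s)}

def partition_by_first_bit(n):
    """Build E_{n+1} from E_n^c and E_n, as in the proof."""
    return {"1" + s for s in odd_strings(n)} | {"0" + s for s in even_strings(n)}

for n in range(1, 8):
    assert partition_by_first_bit(n) == even_strings(n + 1)
    assert len(even_strings(n)) == 2 ** (n - 1)
```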

3.7.4.9.

Solution.

Assume that for \(n\) persons \((n\geqslant 1),\frac{(n-1)n}{2}\) handshakes take place. If one more person enters the room, he or she will shake hands with \(n\) people,

\begin{equation*}

\begin{split}

\frac{(n-1)n}{2}+n & =\frac{n^2-n+2n}{2}\\

&=\frac{n^2+n}{2}=\frac{n(n+1)}{2}\\

&=\frac{((n+1)-1)(n+1)}{2} \square

\end{split}

\end{equation*}

Also, for \(n=1\text{,}\) there are no handshakes, which matches the conjectured formula:

\begin{equation*}

\frac{(1-1)(1)}{2}=0 \quad \square.

\end{equation*}
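A direct count agrees with the formula. In the sketch below a handshake is modeled as an unordered pair of distinct people, and `handshakes` is our own helper name.

```python
from itertools import combinations

def handshakes(n):
    """Number of unordered pairs among n people."""
    return len(list(combinations(range(n), 2)))

for n in range(1, 11):
    assert handshakes(n) == (n - 1) * n // 2
    # One more person shakes hands with each of the n people already present.
    assert handshakes(n + 1) == handshakes(n) + n
```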

3.7.4.11.

Solution.

Basis: \(a_1 + a_2 + a_3\) may be evaluated only two ways. Since + is associative, \((a_1 + a_2) + a_3 = a_1 + (a_2 + a_3)\text{.}\) Hence, \(p(3)\) is true.

Induction: Assume that for some \(n\geq 3\text{,}\) \(p(3), p(4), \dots , p(n)\) are all true. Now consider the sum \(a_1 + a_2 + \cdots + a_n + a_{n+1}\text{.}\) Any of the \(n\) additions in this expression can be applied last. If the \(j\)th addition is applied last, we have \(c_j=(a_1+a_2+\cdots +a_j)+(a_{j+1}+\cdots +a_{n+1})\text{.}\) No matter how the expressions to the left and right of the \(j\)th addition are evaluated, the result will always be the same by the induction hypothesis, specifically \(p(j)\) and \(p(n+1-j)\text{.}\) We now can prove that \(c_1=c_2=\cdots =c_n\text{.}\) If \(i < j\text{,}\)

\begin{equation*}

\begin{split}

c_i &=(a_1+a_2+\cdots +a_i)+(a_{i+1}+\cdots +a_{n+1})\\

&=(a_1+a_2+\cdots +a_i)+(a_{i+1}+\cdots +a_j)+(a_{j+1}+\cdots +a_{n+1})\\

&=((a_1+a_2+\cdots +a_i)+(a_{i+1}+\cdots +a_j))+(a_{j+1}+\cdots +a_{n+1})\\

&=(a_1+a_2+\cdots +a_j)+(a_{j+1}+\cdots +a_{n+1})\\

&=c_j \quad\quad \square

\end{split}

\end{equation*}

3.7.4.12.

3.7.4.13.

Solution.

For \(m\geqslant 1\text{,}\) let \(p(m)\textrm{ be } x^{n+m}=x^nx^m\) for all \(n\geqslant 1\text{.}\) The basis for this proof follows directly from the basis for the definition of exponentiation.

Induction: Assume that for some \(m\geq 1\text{,}\) \(p(m)\) is true. Then

\begin{equation*}

\begin{split}

x^{n+(m+1)} & =x^{(n+m)+1}\quad \textrm{by associativity of integer addition}\\

&=x^{n+m}x^1 \quad \textrm{ by recursive definition}\\

&=x^nx^mx^1 \quad \textrm{induction hypothesis}\\

&=x^nx^{m+1}\quad \textrm{recursive definition}\quad \square\\

\end{split}

\end{equation*}

3.8 Quantifiers

3.8.5 Exercises

3.8.5.1.

3.8.5.3.

Answer.

-

There is a book with a cover that is not blue.

-

Every mathematics book that is published in the United States has a blue cover.

-

There exists a mathematics book with a cover that is not blue.

-

There exists a book that appears in the bibliography of every mathematics book.

-

\(\displaystyle (\forall x)(B(x)\to M(x))\)

-

\(\displaystyle (\exists x)(M(x)\land \neg U(x))\)

-

\(\displaystyle (\exists x)((\forall y)(\neg R(x,y)))\)

3.8.5.5.

3.8.5.7.

3.8.5.9.

3.8.5.10.

3.8.5.11.

3.9 A Review of Methods of Proof

3.9.3 Exercises

3.9.3.1.

Answer.

The given statement can be written in if \(\dots\) , then \(\dots\) format as: If \(x\) and \(y\) are two odd positive integers, then \(x+y\) is an even integer.

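Proof: Assume \(x\) and \(y\) are two positive odd integers. We will show that \(x+y=2 \cdot \textrm{(some positive integer)}\text{.}\) Since \(x\) and \(y\) are odd and positive, \(x=2m+1\) and \(y=2n+1\) for some nonnegative integers \(m\) and \(n\text{.}\)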

Then,

\begin{equation*}

x+y=(2m+1)+(2n+1)=2((m+n)+1)=2\cdot\textrm{(some positive integer)}

\end{equation*}

Therefore, \(x+y\) is an even positive integer. \(\square\)

3.9.3.3.

Answer.

Proof: (Indirect) Assume to the contrary, that \(\sqrt{2}\) is a rational number. Then there exist \(p,q\in \mathbb{Z}, (q\neq 0)\) where \(\frac{p}{q}=\sqrt{2}\) and where \(\frac{p}{q}\) is in lowest terms, that is, \(p\) and \(q\) have no common factor other than 1.

\begin{equation*}

\begin{split}

\frac{p}{q}=\sqrt{2} & \Rightarrow \frac{p^2}{q^2}=2\\

&\Rightarrow p^2=2 q^2 \\

&\Rightarrow p^2 \textrm{ is an even integer}\\

&\Rightarrow p \textrm{ is an even integer (see Exercise 2)}\\

&\Rightarrow \textrm{4 is a factor of }p^2\\

&\Rightarrow q^2 \textrm{ is even}\\

&\Rightarrow q \textrm{ is even}\\

\end{split}

\end{equation*}

Hence \(p\) and \(q\) are both even, so they have the common factor 2. This contradicts the assumption that \(\frac{p}{q}\) is in lowest terms. \(\square\)

3.9.3.5.

Answer.

Proof: (Indirect) Assume \(x,y\in \mathbb{R}\) and \(x+y\leqslant 1\text{.}\) Assume to the contrary that \(\left(x\leqslant \frac{1}{2}\textrm{ or } y\leqslant \frac{1}{2}\right)\) is false, which is equivalent to \(x>\frac{1}{2}\textrm{ and } y>\frac{1}{2}\text{.}\) Hence \(x+y>\frac{1}{2}+\frac{1}{2}=1\text{.}\) This contradicts the assumption that \(x+y\leqslant 1\text{.}\) \(\square\)

4 More on Sets

4.1 Methods of Proof for Sets

4.1.5 Exercises

4.1.5.1.

Answer.

-

Assume that \(x\in A\) (condition of the conditional conclusion \(A \subseteq C\)). Since \(A \subseteq B\text{,}\) \(x\in B\) by the definition of \(\subseteq\text{.}\) \(B\subseteq C\) and \(x\in B\) implies that \(x\in C\text{.}\) Therefore, if \(x\in A\text{,}\) then \(x\in C\text{.}\) \(\square\)

-

(Proof that \(A -B \subseteq A\cap B^c\)) Let \(x\) be in \(A - B\text{.}\) Therefore, \(x\) is in \(A\text{,}\) but it is not in \(B\text{;}\) that is, \(x \in A\) and \(x \in B^c \Rightarrow x\in A\cap B^c\text{.}\) \(\square\)

-

\((\Rightarrow )\) Assume that \(A \subseteq B\) and \(A \subseteq C\text{.}\) Let \(x\in A\text{.}\) By the two premises, \(x\in B\) and \(x\in C\text{.}\) Therefore, by the definition of intersection, \(x\in B\cap C\text{.}\) \(\square\)

-

\((\Rightarrow )\)(Indirect) Assume that \(B^c\) is not a subset of \(A^c\) . Therefore, there exists \(x\in B^c\) that does not belong to \(A^c\text{.}\) \(x \notin A^c \Rightarrow x \in A\text{.}\) Therefore, \(x\in A\) and \(x\notin B\text{,}\) a contradiction to the assumption that \(A\subseteq B\text{.}\) \(\square\)

-

There are two cases to consider. The first is when \(C\) is empty. Then the conclusion follows since both Cartesian products are empty. If \(C\) isn’t empty, we have two subcases. If \(A\) is empty, then \(A\times C = \emptyset\text{,}\) which is a subset of every set. Finally, the interesting subcase is when \(A\) is not empty. Now we pick any pair \((a,c) \in A\times C\text{.}\) This means that \(a\) is in \(A\) and \(c\) is in \(C\text{.}\) Since \(A\) is a subset of \(B\text{,}\) \(a\) is in \(B\) and so \((a,c) \in B \times C\text{.}\) Therefore \(A\times C \subseteq B\times C\text{.}\) \(\square\)

4.1.5.3.

Answer.

-

If \(A = \mathbb{Z}\) and \(B=\emptyset\text{,}\) \(A - B = \mathbb{Z}\text{,}\) while \(B - A = \emptyset\text{.}\)

-

If \(A=\{0\}\) and \(B = \{1\}\text{,}\) \((0,1) \in A \times B\text{,}\) but \((0, 1)\) is not in \(B\times A\text{.}\)

-

If \(A = \{1\}\text{,}\) \(B = \{1\}\text{,}\) and \(C =\emptyset\text{,}\) then the left hand side of the identity is \(\{1\}\) while the right hand side is the empty set. Another example is \(A = \{1,2\}\text{,}\) \(B = \{1\}\text{,}\) and \(C =\{2\}.\)

4.1.5.5.

Solution.

Proof: Let \(p(n)\) be

\begin{equation*}

A\cap (B_1\cup B_2\cup \cdots \cup B_n)=(A\cap B_1)\cup (A\cap B_2)\cup \cdots \cup (A\cap B_n)\text{.}

\end{equation*}

Basis: We must show that \(p(2) : A \cap (B_1 \cup B_2 )=(A\cap B_1) \cup (A\cap B_2)\) is true. This was done by several methods in section 4.1.

Induction: Assume for some \(n\geq 2\) that \(p(n)\) is true. Then

\begin{equation*}

\begin{split}

A\cap (B_1\cup B_2\cup \cdots \cup B_{n+1})&=A\cap ((B_1\cup B_2\cup \cdots \cup B_n)\cup B_{n+1})\\

&=(A \cap (B_1\cup B_2\cup \cdots \cup B_n))\cup (A\cap B_{n+1}) \quad \textrm{by } p(2)\\

&=((A\cap B_1)\cup \cdots \cup (A\cap B_n))\cup (A\cap B_{n+1})\quad \textrm{by the induction hypothesis}\\

&=(A\cap B_1)\cup \cdots \cup (A\cap B_n)\cup (A\cap B_{n+1})\quad \square\\

\end{split}

\end{equation*}

4.1.5.6.

Answer.

The statement is false. The sets \(A=\{1,2\}\text{,}\) \(B=\{2,3\}\) and \(C=\{3,4\}\) provide a counterexample. Looking ahead to Chapter 6, we would say that the relation of being non-disjoint is not transitive (see 6.3.3).
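The counterexample is easy to confirm with Python's built-in sets. The helper `overlap` is our own name for "is non-disjoint from".

```python
A, B, C = {1, 2}, {2, 3}, {3, 4}

def overlap(x, y):
    """True when x and y share at least one element."""
    return not x.isdisjoint(y)

# A meets B and B meets C, yet A and C are disjoint.
print(overlap(A, B), overlap(B, C), overlap(A, C))
```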

4.2 Laws of Set Theory

4.2.4 Exercises

4.2.4.1.

Answer.

-

\begin{equation*} \begin{array}{ccccccc} A & B &A^c & B^c & A\cup B & (A\cup B)^c &A^c\cap B^c \\ \hline 0 & 0 &1 & 1 & 0 & 1 & 1 \\ 0 & 1 &1 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 1 & 1 & 0 & 0 \\ 1 & 1 & 0 & 0 & 1 & 0 & 0 \\ \end{array} \end{equation*}The last two columns are the same so the two sets must be equal.

-

\begin{equation*} \begin{split} x\in A\cup A & \Rightarrow (x\in A) \lor (x\in A)\quad\textrm{by the definition of } \cup\\ &\Rightarrow x\in A \quad\textrm{ by the idempotent law of logic} \end{split} \end{equation*}Therefore, \(A\cup A\subseteq A\text{.}\)\begin{equation*} \begin{split} x\in A &\Rightarrow (x\in A) \lor (x\in A) \quad \textrm{ by disjunctive addition}\\ & \Rightarrow x\in A\cup A\\ \end{split} \end{equation*}Therefore, \(A \subseteq A\cup A\) and so we have \(A\cup A=A\text{.}\)

4.2.4.3.

Answer.

For all parts of this exercise, a reason should be supplied for each step. We have supplied reasons only for part a and left them out of the other parts to give you further practice.

-

\begin{equation*} \begin{split} A \cup (B-A)&=A\cup (B \cap A^c) \textrm{ by Exercise 1 of Section 4.1}\\ & =(A\cup B)\cap (A\cup A^c) \textrm{ by the distributive law}\\ &=(A\cup B)\cap U \textrm{ by the complement law}\\ &=(A\cup B) \textrm{ by the identity law } \square \end{split}\text{.} \end{equation*}

-

\begin{equation*} \begin{split} A - B & = A \cap B ^c\\ & =B^c\cap A\\ &=B^c\cap (A^c)^c\\ &=B^c-A^c\\ \end{split}\text{.} \end{equation*}

-

Select any element, \(x \in A\cap C\text{.}\) Such an element exists since \(A\cap C\) is not empty.\begin{equation*} \begin{split} x\in A\cap C\ &\Rightarrow x\in A \land x\in C \\ & \Rightarrow x\in B \land x\in C \\ & \Rightarrow x\in B\cap C \\ & \Rightarrow B\cap C \neq \emptyset \quad \square \\ \end{split}\text{.} \end{equation*}

-

\begin{equation*} \begin{split} A\cap (B-C) &=A\cap (B\cap C^c) \\ & = (A\cap B\cap A^c)\cup (A\cap B\cap C^c) \\ & =(A\cap B)\cap (A^c\cup C^c) \\ & =(A\cap B)\cap (A\cap C)^c \\ & =(A\cap B)-(A\cap C) \quad \square\\ \end{split}\text{.} \end{equation*}

-

\begin{equation*} \begin{split} A-(B\cup C)& = A\cap (B\cup C)^c\\ & =A\cap (B^c\cap C^c)\\ & =(A\cap B^c)\cap (A\cap C^c)\\ & =(A-B)\cap (A-C) \quad \square\\ \end{split}\text{.} \end{equation*}
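Identities such as those in parts a, b and e can be tested exhaustively over a small universe before attempting a proof. The sketch below assumes a four-element universe `U` of our own choosing and checks every triple of subsets; a passing check is supporting evidence, not a substitute for the step-by-step justification asked for above.

```python
from itertools import combinations

U = frozenset(range(4))   # small universe, chosen only for illustration

def subsets(universe):
    """All subsets of the universe as frozensets."""
    items = sorted(universe)
    return [frozenset(c) for k in range(len(items) + 1)
            for c in combinations(items, k)]

def complement(s):
    return U - s

def check_identities():
    """Test parts a, b and e on every choice of A, B, C within U."""
    all_subsets = subsets(U)
    for A in all_subsets:
        for B in all_subsets:
            if A | (B - A) != A | B:                      # part a
                return False
            if A - B != complement(B) - complement(A):    # part b
                return False
            for C in all_subsets:
                if A - (B | C) != (A - B) & (A - C):      # part e
                    return False
    return True

print(check_identities())
```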

4.2.4.5. Hierarchy of Set Operations.

4.3 Minsets

4.3.3 Exercises

4.3.3.1.

4.3.3.3.

4.3.3.5.

4.3.3.7.

Answer.

Let \(a\in A\text{.}\) For each \(i\text{,}\) \(a\in B_i\text{,}\) or \(a\in B_i{}^c\text{,}\) since \(B_i\cup B_i{}^c=A\) by the complement law. Let \(D_i=B_i\) if \(a\in B_i\text{,}\) and \(D_i=B_i{}^c\) otherwise. Since \(a\) is in each \(D_i\text{,}\) it must be in the minset \(D_1\cap D_2 \cdots \cap D_n\text{.}\) Now consider two different minsets \(M_1= D_1\cap D_2\cdots \cap D_n\text{,}\) and \(M_2=G_1\cap G_2\cdots \cap G_n\text{,}\) where each \(D_i\) and \(G_i\) is either \(B_i\) or \(B_i{}^c\text{.}\) Since these minsets are not equal, \(D_i\neq G_i\text{,}\) for some \(i\text{.}\) Therefore, \(M_1\cap M_2=D_1\cap D_2 \cdots \cap D_n\cap G_1\cap G_2\cdots \cap G_n=\emptyset\text{,}\) since two of the sets in the intersection are disjoint. Since every element of \(A\) is in a minset and the minsets are disjoint, the nonempty minsets must form a partition of \(A\text{.}\) \(\square\)

4.4 The Duality Principle

4.4.2 Exercises

4.4.2.1.

4.4.2.3.

4.4.2.5.

Answer.

The maxsets are:

-

\(\displaystyle B_1\cup B_2=\{1,2,3,5\}\)

-

\(\displaystyle B_1\cup B_2{}^c=\{1,3,4,5,6\}\)

-

\(\displaystyle B_1{}^c\cup B_2=\{1,2,3,4,6\}\)

-

\(\displaystyle B_1{}^c\cup B_2{}^c=\{2,4,5,6\}\)

They do not form a partition of \(A\) since it is not true that the intersection of any two of them is empty. A set is said to be in maxset normal form when it is expressed as the intersection of distinct nonempty maxsets or it is the universal set \(U\text{.}\)
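The maxsets can be recomputed with set operations. The sets `B1`, `B2` and the universe `A` below are assumptions: they are inferred from the four maxsets listed above and are not quoted from the exercise itself.

```python
from itertools import combinations

# Assumed data, inferred from the listed maxsets.
A = {1, 2, 3, 4, 5, 6}
B1 = {1, 3, 5}
B2 = {1, 2, 3}

def complement(s):
    """Complement relative to the universe A."""
    return A - s

maxsets = [
    B1 | B2,
    B1 | complement(B2),
    complement(B1) | B2,
    complement(B1) | complement(B2),
]

# Pairs of maxsets with a nonempty intersection; a partition would have none.
overlapping_pairs = [(x, y) for x, y in combinations(maxsets, 2) if x & y]
print(maxsets, len(overlapping_pairs))
```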

5 Introduction to Matrix Algebra

5.1 Basic Definitions and Operations

5.1.4 Exercises

5.1.4.1.

Answer.

For parts c, d and i of this exercise, only a verification is needed. Here, we supply the result that will appear on both sides of the equality.

-

\(\displaystyle AB=\left( \begin{array}{cc} -3 &6 \\ 9 & -13 \\ \end{array} \right) \quad BA=\left( \begin{array}{cc} 2 & 3 \\ -7 & -18 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{cc} 1 & 0 \\ 5 & -2 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{cc} 3 & 0 \\ 15 & -6 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{ccc} 18 & -15 & 15 \\ -39 & 35 & -35 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{ccc} -12 & 7 & -7 \\ 21 & -6 & 6 \\ \end{array} \right)\)

-

\(\displaystyle B+0=B\)

-

\(\displaystyle \left( \begin{array}{cc} 0 & 0 \\ 0 & 0 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{cc} 0 & 0 \\ 0 & 0 \\ \end{array} \right)\)

-

\(\displaystyle \left( \begin{array}{cc} 5 & -5 \\ 10 & 15 \\ \end{array} \right)\)

5.1.4.3.

5.1.4.5.

5.1.4.7.

Answer.

-

\(Ax=\left( \begin{array}{c} 2x_1+1x_2 \\ 1x_1-1x_2 \\ \end{array} \right)\) equals \(\left( \begin{array}{c} 3 \\ 1 \\ \end{array} \right)\) if and only if both of the equalities \(2x_1+x_2=3 \textrm{ and } x_1-x_2=1\) are true.

-

(i) \(A=\left( \begin{array}{cc} 2 & -1 \\ 1 & 1 \\ \end{array} \right)\) \(x=\left( \begin{array}{c} x_1 \\ x_2 \\ \end{array} \right)\) \(B=\left( \begin{array}{c} 4 \\ 0 \\ \end{array} \right)\)

-

\(A=\left( \begin{array}{ccc} 1 & 1 & 2 \\ 1 & 2 & -1 \\ 1 & 3 & 1 \\ \end{array} \right)\) \(x=\left( \begin{array}{c} x_1 \\ x_2 \\ x_3 \\ \end{array} \right)\) \(B=\left( \begin{array}{c} 1 \\ -1 \\ 5 \\ \end{array} \right)\)

-

\(A=\left( \begin{array}{ccc} 1 & 1 & 0 \\ 0 & 1 & 0 \\ 1 & 0 & 3 \\ \end{array} \right)\) \(x=\left( \begin{array}{c} x_1 \\ x_2 \\ x_3 \\ \end{array} \right)\) \(B=\left( \begin{array}{c} 3 \\ 5 \\ 6 \\ \end{array} \right)\)

5.2 Special Types of Matrices

5.2.3 Exercises

5.2.3.1.

Answer.

-

\(\displaystyle \left( \begin{array}{cc} -1/5 & 3/5 \\ 2/5 & -1/5 \\ \end{array} \right)\)

-

No inverse exists.

-

\(\displaystyle \left( \begin{array}{cc} 1 & 3 \\ 0 & 1 \\ \end{array} \right)\)

-

\(\displaystyle A^{-1}=A\)

-

\(\displaystyle \left( \begin{array}{ccc} 1/3 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & -1/5 \\ \end{array} \right)\)

5.2.3.3.

Solution.

The object here is to prove a formula for the inverse of \(AB\text{,}\) which is denoted \((AB)^{-1}\text{.}\) If \(B^{-1} A^{-1}\) inverts \(AB\) (which it does), then the formula is proven.

\begin{equation*}

\begin{split}

\left(B^{-1}A^{-1}\right)(AB)&=\left(B^{-1}\right)\left(A^{-1}(AB)\right)\\

&= \left(B^{-1}\right) \left(\left(A^{-1} A\right) B\right)\\

&=(\left(B^{-1}\right)I B )\\

&=B^{-1}(B)\\

&=I

\end{split}

\end{equation*}

Similarly, \((AB)\left(B^{-1}A^{-1}\right)=I\text{.}\)

By Theorem 5.2.6, \(B^{-1}A^{-1}\) is the only inverse of \(AB\text{.}\) If we tried to invert \(AB\) with \(A^{-1}B^{-1}\text{,}\) we would be unsuccessful since we cannot rearrange the order of the matrices.
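The order of the factors can be checked numerically. The following is a minimal sketch using exact fractions; the matrices `A` and `B` are hypothetical examples chosen only for illustration, and the helper names `matmul` and `inverse` are not from the text.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def inverse(M):
    """Inverse of a 2x2 matrix via the determinant formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

# Hypothetical invertible matrices, chosen only for illustration.
A = [[1, 2], [3, 5]]
B = [[2, 1], [1, 1]]

lhs = inverse(matmul(A, B))                      # (AB)^{-1}
right_order = matmul(inverse(B), inverse(A))     # B^{-1} A^{-1}
wrong_order = matmul(inverse(A), inverse(B))     # A^{-1} B^{-1}
print(lhs == right_order, lhs == wrong_order)    # True False
```

For these particular matrices the reversed order matches and the unreversed order does not, which agrees with the remark about not rearranging the factors.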

5.2.3.5. Linearity of Determinants.

Solution.

-

Let \(A=\left( \begin{array}{cc} a & b \\ c & d \\ \end{array} \right)\) and \(B=\left( \begin{array}{cc} x & y \\ z & w \\ \end{array} \right)\text{.}\)\begin{equation*} \begin{split} \det(A B) & =\det \left( \begin{array}{cc} a x+b z & a y+b w \\ c x+d z & c y+d w \\ \end{array} \right)\\ &=a d w x-a d y z-b c w x+b c y z \quad \text{four terms cancel}\\ &=(ad-bc)x w - (ad -bc)y z\\ &=(ad-bc)(x w - y z)\\ &=(\det A)(\det B) \end{split}\text{.} \end{equation*}

-

\(1=\det I=\det \left(AA^{-1}\right)=\det A\text{ }\det A^{-1}\text{.}\) Now solve for \(\det A^{-1}\text{.}\)

-

\(\det A=1\cdot 1 - 3 \cdot 2 =-5\) while \(\det A^{-1}= \frac{1}{5} \cdot \frac{1}{5} - \frac{3}{5} \cdot \frac{2}{5} = -\frac{1}{5}\text{.}\)

5.2.3.7.

Answer.

Basis: \((n=1): \det A^1=\det A =(\det A )^1\)

\begin{equation*}

\begin{split}

\det A^{n+1} & =\det \left(A^nA\right)\quad \textrm{ by the definition of exponents}\\

&=\det \left(A^n\right)\det (A)\quad \textrm{ by exercise 5} \\

&=(\det A)^n(\det A)\quad \textrm{ by the induction hypothesis }\\

&=(\det A)^{n+1}

\end{split}

\end{equation*}
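The identity \(\det A^n=(\det A)^n\) can be spot-checked for small powers. This is only a sanity check under assumptions, not a substitute for the induction: the matrix `A` below is a hypothetical example with determinant \(-5\), and the helper names are illustrative.

```python
def matmul(X, Y):
    """Product of two 2x2 integer matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def det(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def power(M, n):
    """M multiplied by itself n times (n >= 0), starting from the identity."""
    result = [[1, 0], [0, 1]]
    for _ in range(n):
        result = matmul(result, M)
    return result

A = [[1, 3], [2, 1]]  # hypothetical example; det(A) = -5
checks = [det(power(A, n)) == det(A) ** n for n in range(1, 9)]
print(all(checks))
```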

5.2.3.9.

Answer.

-

Assume \(A=B D B^{-1}\text{.}\)\begin{equation*} \begin{split} A^{m+1} &=A^mA\\ &=(B D^m B^{-1})(BDB^{-1})\quad \textrm{ by the induction hypothesis} \\ &=(BD^m)(B^{-1} B ) (DB^{-1}) \quad \textrm{ by associativity} \\ &=B D^m D B^{-1} \quad \textrm{ by the definition of inverse}\\ &=B D^{m+1} B^{-1} \quad \square \end{split} \end{equation*}

-

\(\displaystyle A^{10}=BD^{10}B^{-1}= \left( \begin{array}{cc} -9206 & 15345 \\ -6138 & 10231 \\ \end{array} \right)\)

5.3 Laws of Matrix Algebra

5.3.3 Exercises

5.3.3.1.

Answer.

-

Let \(A\text{,}\) \(B\text{,}\) and \(C\) be \(m\) by \(n\) matrices. Then \(A+(B+C)=(A+B)+C\text{.}\)

-

Let \(A\) and \(B\) be \(m\) by \(n\) matrices, and let \(c\in \mathbb{R}\text{.}\) Then \(c(A+B)=cA+cB\text{.}\)

-

Let \(A\) be an \(m\) by \(n\) matrix, and let \(c_1,c_2\in \mathbb{R}\text{.}\) Then \(\left(c_1+c_2\right)A=c_1A+c_2A\text{.}\)

-

Let \(A\) be an \(m\) by \(n\) matrix, and let \(c_1,c_2\in \mathbb{R}\text{.}\) Then \(c_1\left(c_2A\right)=\left(c_1c_2\right)A\text{.}\)

-

Let \(\pmb{0}\) be the zero matrix, of size \(m \textrm{ by } n\text{,}\) and let \(A\) be a matrix of size \(n \textrm{ by } r\text{.}\) Then \(\pmb{0}A=\pmb{0}=\textrm{ the } m \textrm{ by } r \textrm{ zero matrix}\text{.}\)

-

Let \(A\) be an \(m \textrm{ by } n\) matrix, and \(0 = \textrm{ the number zero}\text{.}\) Then \(0A=\pmb{0}=\textrm{ the } m \textrm{ by } n \textrm{ zero matrix}\text{.}\)

-

Let \(A\) be an \(m \textrm{ by } n\) matrix, and let \(\pmb{0}\) be the \(m \textrm{ by } n\) zero matrix. Then \(A+\pmb{0}=A\text{.}\)

-

Let \(A\) be an \(m \textrm{ by } n\) matrix. Then \(A+(- 1)A=\pmb{0}\text{,}\) where \(\pmb{0}\) is the \(m \textrm{ by } n\) zero matrix.

-

Let \(A\text{,}\) \(B\text{,}\) and \(C\) be \(m \textrm{ by } n\text{,}\) \(n \textrm{ by } r\text{,}\) and \(n \textrm{ by } r\) matrices respectively. Then \(A(B+C)=AB+AC\text{.}\)

-

Let \(A\text{,}\) \(B\text{,}\) and \(C\) be \(m \textrm{ by } n\text{,}\) \(r \textrm{ by } m\text{,}\) and \(r \textrm{ by } m\) matrices respectively. Then \((B+C)A=BA+CA\text{.}\)

-

Let \(A\text{,}\) \(B\text{,}\) and \(C\) be \(m \textrm{ by } n\text{,}\) \(n \textrm{ by } r\text{,}\) and \(r \textrm{ by } p\) matrices respectively. Then \(A(BC)=(AB)C\text{.}\)

-

Let \(A\) be an \(m \textrm{ by } n\) matrix, \(I_m\) the \(m \textrm{ by } m\) identity matrix, and \(I_n\) the \(n \textrm{ by } n\) identity matrix. Then \(I_mA=AI_n=A\text{.}\)

-

Let \(A\) be an \(n \textrm{ by } n\) matrix. Then if \(A^{-1}\) exists, \(\left(A^{-1}\right)^{-1}=A\text{.}\)

-

Let \(A\) and \(B\) be \(n \textrm{ by } n\) matrices. Then if \(A^{-1}\) and \(B^{-1}\) exist, \((AB)^{-1}=B^{-1}A^{-1}\text{.}\)

5.3.3.3.

Answer.

-

\(\displaystyle AB+AC=\left( \begin{array}{ccc} 21 & 5 & 22 \\ -9 & 0 & -6 \\ \end{array} \right)\)

-

\(\displaystyle A^{-1}=\left( \begin{array}{cc} 1 & 2 \\ 0 & -1 \\ \end{array} \right)=A\)

-

\(A(B+C)=A B+ A C\text{,}\) which is given in part (a).

-

\(\left(A^2\right)^{-1}=(AA)^{-1}=\left(A^{-1}A\right)^{-1}=I^{-1}=I \quad \) by part (b), since \(A^{-1}=A\text{.}\)

5.4 Matrix Oddities

5.4.2 Exercises

5.4.2.1.

Answer.

In elementary algebra (the algebra of real numbers), each of the given oddities does not exist.

-

\(AB\) may be different from \(BA\text{.}\) Not so in elementary algebra, since \(a b = b a\) by the commutative law of multiplication.

-

There exist matrices \(A\) and \(B\) such that \(AB = \pmb{0}\text{,}\) yet \(A\neq \pmb{0}\) and \(B\neq \pmb{0}\text{.}\) In elementary algebra, the only way \(ab = 0\) is if either \(a\) or \(b\) is zero. There are no exceptions.

-

There exist matrices \(A\text{,}\) \(A\neq \pmb{0}\text{,}\) yet \(A^2=\pmb{0}\text{.}\) In elementary algebra, \(a^2=0\Leftrightarrow a=0\text{.}\)

-

There exist matrices \(A\) such that \(A^2=A\text{,}\) where \(A\neq \pmb{0}\) and \(A\neq I\text{.}\) In elementary algebra, \(a^2=a\Leftrightarrow a=0 \textrm{ or } 1\text{.}\)

-

There exist matrices \(A\) where \(A^2=I\) but \(A\neq I\) and \(A\neq -I\text{.}\) In elementary algebra, \(a^2=1\Leftrightarrow a=1\textrm{ or }-1\text{.}\)

5.4.2.3.

5.4.2.5.

Answer.

-

\(A^{-1}=\left( \begin{array}{cc} 1/3 & 1/3 \\ 1/3 & -2/3 \\ \end{array} \right)\) \(x_1=4/3\text{,}\) and \(x_2=1/3\)

-

\(A^{-1}=\left( \begin{array}{cc} 1 & -1 \\ 1 & -2 \\ \end{array} \right)\) \(x_1=4\text{,}\) and \(x_2=4\)

-

\(A^{-1}=\left( \begin{array}{cc} 1/3 & 1/3 \\ 1/3 & -2/3 \\ \end{array} \right)\) \(x_1=2/3\text{,}\) and \(x_2=-1/3\)

-

\(A^{-1}=\left( \begin{array}{cc} 1/3 & 1/3 \\ 1/3 & -2/3 \\ \end{array} \right)\) \(x_1=0\text{,}\) and \(x_2=1\)

-

The matrix of coefficients for this system has a zero determinant; therefore, it has no inverse. The system cannot be solved by this method. In fact, the system has no solution.

6 Relations

6.1 Basic Definitions

6.1.4 Exercises

6.1.4.1.

6.1.4.3.

6.1.4.5.

Answer.

-

When \(n=3\text{,}\) there are 27 pairs in the relation.

-

Imagine building a pair of disjoint subsets of \(S\text{.}\) For each element of \(S\) there are three places that it can go: into the first set of the ordered pair, into the second set, or into neither set. Therefore the number of pairs in the relation is \(3^n\text{,}\) by the product rule.
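The count \(3^n\) can be confirmed by brute force for small \(n\). A minimal sketch, assuming the relation consists of ordered pairs of disjoint subsets of \(S=\{1,\ldots,n\}\); the function names are illustrative.

```python
from itertools import combinations

def subsets(items):
    """All subsets of a finite collection, as frozensets."""
    items = list(items)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]

def count_disjoint_pairs(n):
    """Number of ordered pairs (X, Y) of disjoint subsets of {1, ..., n}."""
    all_subsets = subsets(range(1, n + 1))
    return sum(1 for X in all_subsets for Y in all_subsets if not (X & Y))

print(count_disjoint_pairs(3))  # 27
```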

6.1.4.7.

Solution.

Assume \((x,y)\in r_1r_3\text{.}\) This implies that there exists \(z \in A\) such that \((x,z)\in r_1\) and \((z,y)\in r_3\text{.}\) We are given that \(r_1\subseteq r_2\text{,}\) which implies that \((x,z)\in r_2\text{.}\) Combining this with \((z,y)\in r_3\) implies that \((x,y)\in r_2r_3\text{,}\) which proves that \(r_1r_3\subseteq r_2r_3\text{.}\)

6.2 Graphs of Relations on a Set

6.2.2 Exercises

6.2.2.1.

6.2.2.3.

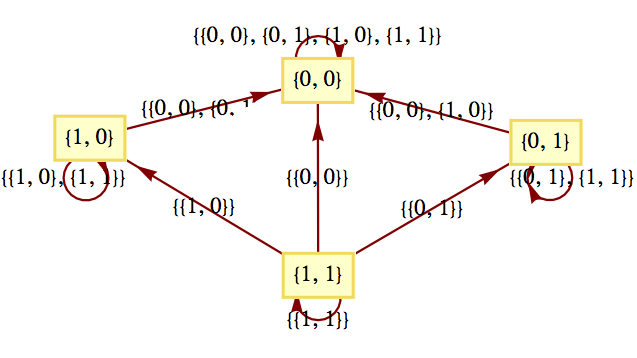

Answer.

6.3 Properties of Relations

6.3.3 Equivalence Relations

Checkpoint 6.3.16.

Solution.

\begin{equation*}

a \equiv_n b \Rightarrow n \mid (a-b) \Rightarrow a-b=n q_1, \quad q_1 \in \mathbb{Z}

\end{equation*}

\begin{equation*}

b\equiv_n c \Rightarrow n \mid (b-c) \Rightarrow b-c=n q_2, \quad q_2 \in \mathbb{Z}

\end{equation*}

Therefore

\begin{equation*}

a-c=(a-b)+(b-c)=n(q_1+q_2) \Rightarrow a \equiv_n c

\end{equation*}

6.3.4 Exercises

6.3.4.1.

Answer.

-

“Divides” is reflexive because, if \(i\) is any positive integer, \(i\cdot 1 = i\) and so \(i \mid i\text{.}\)

-

“Divides” is antisymmetric. Suppose \(i\) and \(j\) are two distinct positive integers. One of them has to be less than the other, so we will assume \(i \lt j\text{.}\) If \(i \mid j\text{,}\) then for some positive integer \(k\text{,}\) where \(k \gt 1\) because \(i \lt j\text{,}\) we have \(i \cdot k = j\text{.}\) But this means that \(j \cdot \frac{1}{k}=i\) and since \(\frac{1}{k}\) is not a positive integer, \(j \nmid i\text{.}\)

-

“Divides” is transitive. If \(h\text{,}\) \(i\) and \(j\) are positive integers such that \(h \mid i\) and \(i \mid j\text{,}\) there must be two positive integers \(k_1\) and \(k_2\) such that \(h \cdot k_1 =i\) and \(i \cdot k_2 = j\text{.}\) Combining these equalities we get \(h \cdot (k_1 \cdot k_2) = j\) and so \(h \mid j\text{.}\)
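The three properties can also be checked exhaustively on an initial segment of the positive integers. This is a finite sanity check rather than a proof; the bound `limit` is an arbitrary choice and the function names are illustrative.

```python
def divides(i, j):
    """True when i divides j, for positive integers i and j."""
    return j % i == 0

def check_divides_properties(limit):
    """Test reflexivity, antisymmetry and transitivity on {1, ..., limit}."""
    dom = range(1, limit + 1)
    reflexive = all(divides(i, i) for i in dom)
    antisymmetric = all(i == j or not (divides(i, j) and divides(j, i))
                        for i in dom for j in dom)
    transitive = all(divides(h, j)
                     for h in dom for i in dom for j in dom
                     if divides(h, i) and divides(i, j))
    return reflexive, antisymmetric, transitive

print(check_divides_properties(30))  # (True, True, True)
```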

6.3.4.3.

Answer.

| Part | reflexive? | symmetric? | antisymmetric? | transitive? |
|---|---|---|---|---|
| i | yes | no | no | yes |
| ii | yes | no | yes | yes |
| iii | no | no | no | no |
| iv | no | yes | yes | yes |
| v | yes | yes | no | yes |
| vi | yes | no | yes | yes |
| vii | no | no | no | no |

-

See Table 6.3.20

-

Graphs ii and vi show partial ordering relations. Graph v is of an equivalence relation.

6.3.4.5.

Answer.

-

No, since \(\lvert 1-1\rvert =0\neq 2\text{,}\) for example.

-

No, since \(\lvert 2-4\rvert =2\) and \(\lvert 4-6\rvert =2\text{,}\) but \(\lvert 2-6\rvert =4\neq 2\text{,}\) for example.

-

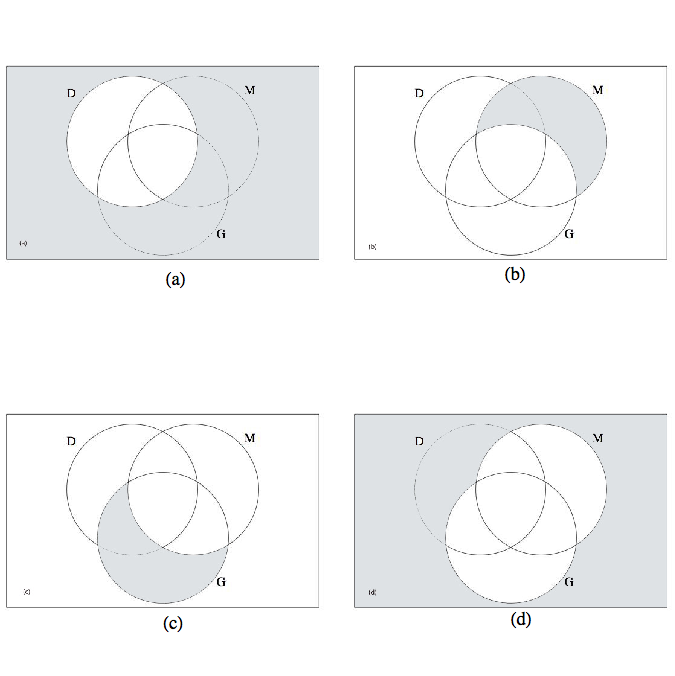

See Figure 6.3.21

Solution to number 5c of section 6.3

6.3.4.7.

Answer.

Let \(a\) be any element of \(A\text{.}\) \(a\in [a]\) since \(r\) is reflexive, so each element of \(A\) is in some equivalence class. Therefore, the union of all equivalence classes equals \(A\text{.}\) Next we show that any two equivalence classes are either identical or disjoint and we are done. Let \([a]\) and \([b]\) be two equivalence classes, and assume that \([a]\cap [b]\neq \emptyset\text{.}\) We want to show that \([a]=[b]\text{.}\) To show that \([a]\subseteq [b]\text{,}\) let \(x\in [a]\text{.}\) \(x\in [a] \Rightarrow a r x \text{.}\) Also, there exists an element, \(y\text{,}\) of \(A\) that is in the intersection of \([a]\) and \([b]\) by our assumption. Therefore,

\begin{equation*}

\begin{split}

a r y \land b r y &\Rightarrow a r y \land y r b \quad r\textrm{ is symmetric}\\

&\Rightarrow a r b \quad \textrm{ transitivity of }r \\

\end{split}

\end{equation*}

Next,

\begin{equation*}

\begin{split}

a r x \land a r b &\Rightarrow x r a \land a r b\\

&\Rightarrow x r b\\

&\Rightarrow b r x\\

& \Rightarrow x \in [b]\\

\end{split}

\end{equation*}

6.3.4.9.

6.3.4.11.

6.4 Matrices of Relations

6.4.3 Exercises

6.4.3.1.

Answer.

-

\(\begin{array}{cc} & \begin{array}{ccc} 4 & 5 & 6 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{ccc} 0 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \\ \end{array} \right) \\ \end{array}\) and \(\begin{array}{cc} & \begin{array}{ccc} 6 & 7 & 8 \\ \end{array} \\ \begin{array}{c} 4 \\ 5 \\ 6 \\ \end{array} & \left( \begin{array}{ccc} 0 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ \end{array} \right) \\ \end{array}\)

-

\(\displaystyle r_1r_2 =\{(3,6),(4,7)\}\)

-

\(\displaystyle \begin{array}{cc} & \begin{array}{ccc} 6 & 7 & 8 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{ccc} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ \end{array} \right) \\ \end{array}\)
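The composition can be recomputed directly from the pairs. In this sketch the sets `r1` and `r2` are read off the two matrices in the first part of the answer (a 1 in row \(x\text{,}\) column \(y\) means \((x,y)\) is in the relation); the function name `compose` is illustrative.

```python
def compose(r1, r2):
    """Pairs (x, y) with (x, z) in r1 and (z, y) in r2 for some z."""
    return {(x, y) for (x, z) in r1 for (w, y) in r2 if z == w}

# Pairs read from the matrices of r1 and r2 given in the answer.
r1 = {(2, 4), (3, 5), (4, 6)}
r2 = {(5, 6), (6, 7)}

print(sorted(compose(r1, r2)))  # [(3, 6), (4, 7)]
```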

6.4.3.3.

6.4.3.5.

Answer.

The matrix of a relation on three elements has nine entries. The three entries on the diagonal must be 1 in order for the relation to be reflexive. In addition, to make the relation symmetric, the off-diagonal entries must be paired up so that they are equal. For example, if the entry in row 1 column 2 is equal to 1, the entry in row 2 column 1 must also be 1. This means that three entries, the ones above the diagonal, determine the whole matrix, so there are \(2^3=8\) different reflexive, symmetric relations on a three-element set.
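The count of 8 can be confirmed by enumerating all \(2^9=512\) zero-one matrices of size 3 by 3 and keeping those that are reflexive and symmetric. A minimal sketch; the function name is illustrative.

```python
from itertools import product

def count_reflexive_symmetric(n):
    """Count n-by-n 0/1 matrices with 1s on the diagonal and M[i][j] == M[j][i]."""
    count = 0
    for bits in product([0, 1], repeat=n * n):
        M = [bits[i * n:(i + 1) * n] for i in range(n)]
        reflexive = all(M[i][i] == 1 for i in range(n))
        symmetric = all(M[i][j] == M[j][i]
                        for i in range(n) for j in range(n))
        if reflexive and symmetric:
            count += 1
    return count

print(count_reflexive_symmetric(3))  # 8
```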

6.4.3.7.

Answer.

-

\(\begin{array}{cc} & \begin{array}{cccc} 1 & 2 & 3 & 4 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{cccc} 0 & 1 & 0 & 0 \\ 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 0 \\ \end{array} \right) \\ \end{array}\) and \(\begin{array}{cc} & \begin{array}{cccc} 1 & 2 & 3 & 4 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{cccc} 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ \end{array} \right) \\ \end{array}\)

-

\(P Q= \begin{array}{cc} & \begin{array}{cccc} 1 & 2 & 3 & 4 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{cccc} 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 0 \\ \end{array} \right) \\ \end{array}\) \(P^2 =\text{ } \begin{array}{cc} & \begin{array}{cccc} 1 & 2 & 3 & 4 \\ \end{array} \\ \begin{array}{c} 1 \\ 2 \\ 3 \\ 4 \\ \end{array} & \left( \begin{array}{cccc} 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ \end{array} \right) \\ \end{array}\)\(=Q^2\)
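The Boolean products above can be recomputed mechanically. In this sketch `P` and `Q` are taken to be the first and second matrices of the previous part, in that order; the function name `bool_product` is illustrative.

```python
def bool_product(X, Y):
    """Boolean product of square 0/1 matrices: OR over k of (X[i][k] AND Y[k][j])."""
    n = len(X)
    return [[int(any(X[i][k] and Y[k][j] for k in range(n)))
             for j in range(n)]
            for i in range(n)]

P = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
Q = [[1, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0],
     [0, 1, 0, 1]]

PQ = bool_product(P, Q)
P2 = bool_product(P, P)
Q2 = bool_product(Q, Q)
for row in PQ:
    print(row)
print(P2 == Q2)  # True
```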

6.4.3.9.

Answer.

-

Antisymmetric: Assume \(R_{ij}\leq S_{ij}\) and \(S_{ij}\leq R_{ij}\) for all \(1\leq i,j\leq n\text{.}\) Therefore, \(R_{ij} = S_{ij}\) for all \(1\leq i,j\leq n\) and so \(R=S\text{.}\) Transitive: Assume \(R, S,\) and \(T\) are matrices where \(R_{ij}\leq S_{ij}\) and \(S_{ij}\leq T_{ij}\text{,}\) for all \(1\leq i,j\leq n\text{.}\) Then \(R_{ij}\leq T_{ij}\) for all \(1\leq i,j\leq n\text{,}\) and so \(R\leq T\text{.}\)

-

\begin{equation*} \begin{split} \left(R^2\right)_{ij}&=R_{i1}R_{1j}+R_{i2}R_{2j}+\cdots +R_{in}R_{nj}\\ &\leq S_{i1}S_{1j}+S_{i2}S_{2j}+\cdots +S_{in}S_{nj}\\ &=\left(S^2\right)_{ij} \Rightarrow R^2\leq S^2 \end{split}\text{.} \end{equation*}To verify that the converse is not true we need only one example. For \(n=2\text{,}\) let \(R_{12}=1\) and all other entries equal \(0\text{,}\) and let \(S\) be the zero matrix. Since \(R^2\) and \(S^2\) are both the zero matrix, \(R^2\leq S^2\text{,}\) but since \(R_{12}>S_{12}, R\leq S\) is false.

-

The matrices are defined on the same set \(A=\left\{a_1,a_2,\ldots ,a_n\right\}\text{.}\) Let \(c\left(a_i\right), i=1,2,\ldots ,n\) be the equivalence classes defined by \(R\) and let \(d\left(a_i\right)\) be those defined by \(S\text{.}\) Claim: \(c\left(a_i\right)\subseteq d\left(a_i\right)\text{.}\)\begin{equation*} \begin{split} a_j\in c\left(a_i\right)&\Rightarrow a_i r a_j\\ &\Rightarrow R_{ij}=1 \Rightarrow S_{ij}=1\\ &\Rightarrow a_i s a_j\\ & \Rightarrow a_j \in d\left(a_i\right)\\ \end{split} \end{equation*}

6.5 Closure Operations on Relations

6.5.3 Exercises

6.5.3.3.

Answer.

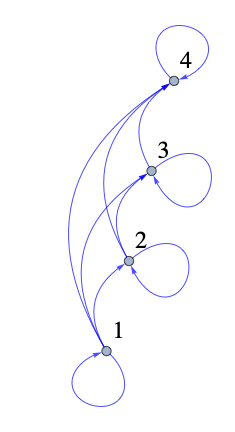

-

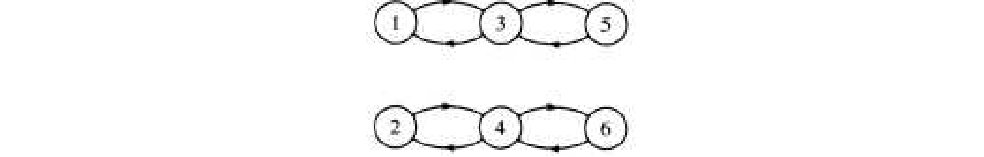

See graphs below.

6.5.3.5.

6.5.3.7.

Answer.

-

By the definition of transitive closure, \(r^+\) is the smallest transitive relation which contains \(r\text{;}\) therefore, it is transitive. The transitive closure of \(r^+\text{,}\) \(\left(r^+\right)^+\text{,}\) is the smallest transitive relation that contains \(r^+\text{.}\) Since \(r^+\) is transitive, \(\left(r^+\right)^+=r^+\text{.}\)

-

The transitive closure of a symmetric relation is symmetric, but it may not be reflexive. If one element is not related to any elements, then the transitive closure will not relate that element to others.

7 Functions

7.1 Definition and Notation

7.1.5 Exercises

7.1.5.1.

7.1.5.3.

7.1.5.5.

7.2 Properties of Functions

7.2.3 Exercises

7.2.3.1.

7.2.3.3.

7.2.3.5.

7.2.3.7.

7.2.3.9.

Answer.

-

Use the function \(f:\mathbb{N}\to \mathbb{Z}\) defined by \(f(x)=x/2\) if \(x\) is even and \(f(x)=-(x+1)/2\) if \(x\) is odd.

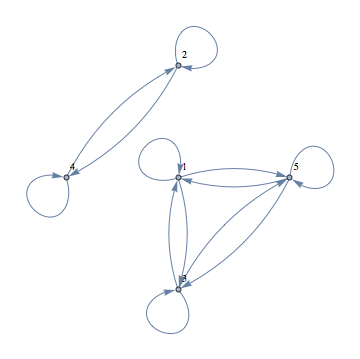

-

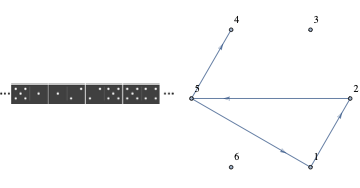

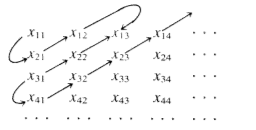

The proof is due to Georg Cantor (1845-1918), and involves listing the rationals through a definite procedure so that none are omitted and duplications are avoided. In the first row list all nonnegative rationals with denominator 1, in the second all nonnegative rationals with denominator 2, etc. In this listing, of course, there are duplications, for example, \(0/1=0/2=0\text{,}\) \(1/1=3/3=1\text{,}\) \(6/4=9/6=3/2\text{,}\) etc. To obtain a list without duplications follow the arrows in Figure 7.2.15, listing only the circled numbers. We obtain: \(0,1,1/2,2,3,1/3,1/4,2/3,3/2,4/1,\ldots\) Each nonnegative rational appears in this list exactly once. We now must insert in this list the negative rationals, and follow the same scheme to obtain:\begin{equation*} 0,1,-1,1/2,-1/2,2,-2,3,-3,1/3,-1/3, \ldots \end{equation*}which can be paired off with the elements of \(\mathbb{N}\text{.}\)
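The pairing of \(\mathbb{N}\) with \(\mathbb{Z}\) from the first part (even \(x\) to \(x/2\text{,}\) odd \(x\) to \(-(x+1)/2\)) can be checked on an initial segment. A minimal sketch, assuming \(\mathbb{N}\) starts at 0; the inverse `f_inverse` is an added illustration and does not appear in the text.

```python
def f(x):
    """Map a natural number to an integer: evens to 0, 1, 2, ...; odds to -1, -2, ..."""
    if x % 2 == 0:
        return x // 2
    return -((x + 1) // 2)

def f_inverse(z):
    """Recover the natural number that f sends to the integer z."""
    return 2 * z if z >= 0 else -2 * z - 1

images = [f(x) for x in range(9)]
print(images)  # [0, -1, 1, -2, 2, -3, 3, -4, 4]
```

On the segment \(0,1,\ldots ,2m\) the images are exactly the integers from \(-m\) to \(m\text{,}\) each hit once, which is the finite shadow of the bijection.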

7.2.3.11.

7.2.3.13.

Answer.

The proof is indirect and follows a technique called the Cantor diagonal process. Assume to the contrary that the set is countable, then the elements can be listed: \(n_1,n_2,n_3,\ldots\) where each \(n_i\) is an infinite sequence of 0s and 1s. Consider the array:

\begin{equation*}

\begin{array}{c}

n_1=n_{11}n_{12}n_{13}\cdots\\

n_2=n_{21}n_{22}n_{23}\cdots\\

n_3=n_{31}n_{32}n_{33}\cdots\\

\quad \vdots\\

\end{array}

\end{equation*}

We assume that this array contains all infinite sequences of 0s and 1s. Consider the sequence \(s\) defined by \(s_i=\begin{cases}

0 & \textrm{ if } n_{\textrm{ii}}=1 \\

1 & \textrm{ if } n_{\textrm{ii}}=0

\end{cases}\)

Notice that \(s\) differs from each \(n_i\) in the \(i\)th position and so cannot be in the list. This is a contradiction, which completes our proof.

7.3 Function Composition

7.3.4 Exercises

7.3.4.1.

Answer.

-

\(g\circ f:A\to C\) is defined by \((g\circ f)(k)=\begin{cases} + & \textrm{ if } k=1 \textrm{ or } k=5 \\ - & \textrm{ otherwise} \end{cases}\)

-

No, since \(f\) is not surjective.

-

No, since \(g\) is not injective.

7.3.4.3.

Answer.

-

\begin{equation*} \begin{array}{ccc} g & g^{-1} & g^2 \\ i & i & i \\ r_1 & r_2 & r_2 \\ r_2 & r_1 & r_1 \\ f_1 & f_1 & i \\ f_2 & f_2 & i \\ f_3 & f_3 & i \\ \end{array} \end{equation*}

-

If \(f\) and \(g\) are permutations of \(A\text{,}\) then they are both injections and their composition, \(f\circ g\text{,}\) is an injection, by Theorem 7.3.6. Since \(f\) and \(g\) are also surjections, \(f\circ g\) is a surjection by Theorem 7.3.7; therefore, \(f\circ g\) is a bijection on \(A\text{,}\) a permutation.

-

Proof by induction: Basis: \((n=1)\text{.}\) The number of permutations of \(A\) is one, the identity function, and \(1!=1\text{.}\) Induction: Assume that the number of permutations on a set with \(n\) elements, \(n\geq 1\text{,}\) is \(n!\text{.}\) Furthermore, assume that \(|A|=n+1\) and that \(A\) contains an element called \(\sigma\text{.}\) Let \(A'=A-\{\sigma\}\text{.}\) We can reduce the definition of a permutation, \(f\text{,}\) on \(A\) to two steps. First, we select any one of the \(n!\) permutations on \(A'\text{.}\) (Note the use of the induction hypothesis.) Call it \(g\text{.}\) This permutation almost completely defines a permutation on \(A\) that we will call \(f\text{.}\) For all \(a\) in \(A'\text{,}\) we start by defining \(f(a)\) to be \(g(a)\text{.}\) We may be making some adjustments, but define it that way for now. Next, we select the image of \(\sigma\text{,}\) which can be done \(n+1\) different ways, allowing for any value in \(A\text{.}\) To keep our function bijective, we must adjust \(f\) as follows: If we select \(f(\sigma)=y \neq \sigma\text{,}\) then we must find the element, \(z\text{,}\) of \(A\) such that \(g(z)=y\text{,}\) and redefine the image of \(z\) to \(f(z)=\sigma\text{.}\) If we had selected \(f(\sigma)=\sigma\text{,}\) then there is no adjustment needed. By the rule of products, the number of ways that we can define \(f\) is \(n!(n+1)=(n+1)!\) \(\square\)

7.3.4.5.

Answer.

-

\(f_3\) does not have an inverse. One way to verify this is to note that \(f_3\) is not one-to-one because \(f_3(0000) = 0000 = f_3(1111)\text{.}\)

7.3.4.7.

7.3.4.9.

7.3.4.11.

Answer.

Proof: Suppose that \(g\) and \(h\) are both inverses of \(f\text{,}\) both having domain \(B\) and codomain \(A\text{.}\)

\begin{equation*}

\begin{split}g &= g\circ i_B \\

& =g\circ (f\circ h)\\

& =(g\circ f)\circ h\\

& =i_A\circ h\\

& =h\quad \Rightarrow g=h \quad \square

\end{split}

\end{equation*}

7.3.4.12.

7.3.4.13.

Answer.

Let \(x,x'\) be elements of \(A\) such that \(g\circ f(x)=g\circ f(x')\text{;}\) that is, \(g(f(x))=g(f(x'))\text{.}\) Since \(g\) is injective, \(f(x)=f(x')\) and since \(f\) is injective, \(x=x'\text{.}\) \(\square\)

Let \(x\) be an element of \(C\text{.}\) We must show that there exists an element of \(A\) whose image under \(g\circ f\) is \(x\text{.}\) Since \(g\) is surjective, there exists an element of \(B\text{,}\) \(y\text{,}\) such that \(g(y)=x\text{.}\) Also, since \(f\) is a surjection, there exists an element of \(A\text{,}\) \(z\text{,}\) such that \(f(z)=y\text{,}\) \(g\circ f(z)=g(f(z))=g(y)=x\text{.}\)\(\square\)

7.3.4.15.

Answer.

Basis: \((n=2)\text{:}\) \(\left(f_1\circ f_2\right){}^{-1}=f_2{}^{-1}\circ f_1{}^{-1}\) by Exercise 7.3.4.12.

Induction: Assume \(n\geq 2\) and

\begin{equation*}

\left(f_1\circ f_2\circ \cdots \circ f_n\right){}^{-1}= f_n{}^{-1}\circ \cdots \circ f_2{}^{-1}\circ f_1{}^{-1}

\end{equation*}

and consider \(\left(f_1\circ f_2\circ \cdots \circ f_{n+1}\right)^{-1}\text{.}\)

\begin{equation*}

\begin{split}

\left(f_1\circ f_2\circ \cdots \circ f_{n+1}\right){}^{-1} &=\left(\left(f_1\circ f_2\circ \cdots \circ f_n\right)\circ f_{n+1}\right){}^{-1}\\

& =f_{n+1}{}^{-1}\circ \left(f_1\circ f_2\circ \cdots \circ f_n\right){}^{-1}\\

& \quad \quad \quad \textrm{ by the basis}\\

&=f_{n+1}{}^{-1}\circ \left(f_n{}^{-1}\circ \cdots \circ f_2{}^{-1}\circ f_1{}^{-1}\right)\\

& \quad \quad \quad \textrm{ by the induction hypothesis}\\

&=f_{n+1}{}^{-1}\circ \cdots \circ f_2{}^{-1}\circ f_1{}^{-1}\text{.} \quad \square

\end{split}

\end{equation*}

8 Recursion and Recurrence Relations

8.1 The Many Faces of Recursion

8.1.8 Exercises

8.1.8.1.

Answer.

\begin{equation*}

\begin{split}

\binom{7}{2} &=\binom{6}{2} +\binom{6}{1} \\

&=\binom{5}{2} +\binom{5}{1} +\binom{5}{1} +\binom{5}{0} \\

&=\binom{5}{2} +2 \binom{5}{1} +1\\

&=\binom{4}{2} +\binom{4}{1} +2(\binom{4}{1} +\binom{4}{0} )+1\\

&=\binom{4}{2} +3 \binom{4}{1} + 3\\

&=\binom{3}{2} +\binom{3}{1} +3(\binom{3}{1} +\binom{3}{0} )+3\\

&=\binom{3}{2} +4 \binom{3}{1} + 6\\

&=\binom{2}{2} +\binom{2}{1} + 4(\binom{2}{1} +\binom{2}{0} ) + 6\\

&=5 \binom{2}{1} + 11\\

&=5(\binom{1}{1} +\binom{1}{0} ) + 11\\

&=21

\end{split}

\end{equation*}
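The expansion above repeatedly applies \(\binom{n}{k}=\binom{n-1}{k}+\binom{n-1}{k-1}\) until it reaches the boundary values \(\binom{n}{0}=\binom{n}{n}=1\text{.}\) A minimal recursive sketch of the same rule, with `math.comb` from the standard library used only as a cross-check:

```python
from math import comb

def binom(n, k):
    """Binomial coefficient computed with the Pascal recursion used above."""
    if k == 0 or k == n:
        return 1
    return binom(n - 1, k) + binom(n - 1, k - 1)

print(binom(7, 2))  # 21
```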

8.1.8.3.

8.1.8.5.

8.2 Sequences

8.2.3 Exercises

8.2.3.1.

Answer.

8.2.3.3.

Answer.

Imagine drawing line \(k\) in one of the infinite regions that it passes through. That infinite region is divided into two infinite regions by line \(k\text{.}\) As line \(k\) is drawn through every one of the \(k-1\) previous lines, you enter another region that line \(k\) divides. Therefore \(k\) regions are divided and the number of regions is increased by \(k\text{.}\) From this observation we get \(P(5)=16\text{.}\)
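The observation translates into the recurrence \(P(k)=P(k-1)+k\text{.}\) The sketch below assumes the starting value \(P(0)=1\) (no lines, one region), which is not stated in this answer, and also assumes the lines are in general position as the argument requires.

```python
def regions(k):
    """Number of plane regions formed by k lines in general position."""
    count = 1  # assumed starting value: with no lines there is one region
    for line in range(1, k + 1):
        count += line  # drawing the next line adds as many regions as its index
    return count

print([regions(k) for k in range(6)])  # [1, 2, 4, 7, 11, 16]
```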

8.2.3.5.

8.3 Recurrence Relations

8.3.5 Exercises

8.3.5.13.

Answer.

-

The characteristic equation is \(a^2-a-1=0\text{,}\) which has solutions \(\alpha =\left.\left(1+\sqrt{5}\right)\right/2\) and \(\beta =\left.\left(1-\sqrt{5}\right)\right/2\text{.}\) It is useful to point out that \(\alpha +\beta =1\) and \(\alpha -\beta =\sqrt{5}\text{.}\) The general solution is \(F(k)=b_1\alpha ^k+b_2\beta ^k\text{.}\) Using the initial conditions, we obtain the system: \(b_1+b_2=1\) and \(b_1\alpha +b_2\beta =1\text{.}\) The solution to this system is \(b_1=\alpha /(\alpha -\beta )=\left.\left(5+\sqrt{5}\right)\right/10\) and \(b_2=-\beta /(\alpha -\beta )=\left.\left(5-\sqrt{5}\right)\right/10\text{.}\) Therefore the final solution is\begin{equation*} \begin{split} F(n) & = \frac{\alpha^{n+1}-\beta^{n+1}}{\alpha-\beta} \\ & = \frac{\left(\left.\left(1+\sqrt{5}\right)\right/2\right)^{n+1} -\left(\left.\left(1-\sqrt{5}\right)\right/2\right)^{n+1}}{\sqrt{5}}\\ \end{split} \end{equation*}

-

\(\displaystyle C_r=F(r+1)\)
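The closed form can be compared against the recurrence numerically. This sketch assumes the initial conditions \(F(0)=F(1)=1\) implied by the system above, and uses floating-point arithmetic with rounding, so it is only reliable for moderate \(n\text{.}\)

```python
from math import sqrt

def fib_recurrence(n):
    """F(n) from F(k) = F(k-1) + F(k-2) with F(0) = F(1) = 1."""
    a, b = 1, 1
    for _ in range(n):
        a, b = b, a + b
    return a

def fib_closed_form(n):
    """F(n) = (alpha^(n+1) - beta^(n+1)) / sqrt(5), rounded to the nearest integer."""
    alpha = (1 + sqrt(5)) / 2
    beta = (1 - sqrt(5)) / 2
    return round((alpha ** (n + 1) - beta ** (n + 1)) / sqrt(5))

print([fib_closed_form(n) for n in range(8)])  # [1, 1, 2, 3, 5, 8, 13, 21]
```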

8.3.5.15.

Answer.

-

For each two-block partition of \(\{1,2,\dots, n-1\}\text{,}\) there are two partitions we can create when we add \(n\text{,}\) but there is one additional two-block partition to count for which one block is \(\{n\}\text{.}\) Therefore, \(D(n)=2D(n-1)+1 \textrm{ for } n \geq 2 \textrm{ and } D(1)=0.\)

-

\(\displaystyle D(n)=2^{n-1}-1\)
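Both the recurrence and the closed form can be checked against a direct enumeration of two-block partitions for small \(n\text{.}\) A minimal sketch; the function names are illustrative.

```python
from itertools import product

def count_two_block_partitions(n):
    """Count unordered partitions of {0, ..., n-1} into two nonempty blocks."""
    universe = frozenset(range(n))
    seen = set()
    for labels in product([0, 1], repeat=n):
        block = frozenset(i for i in range(n) if labels[i] == 0)
        other = universe - block
        if block and other:
            seen.add(frozenset([block, other]))  # unordered pair of blocks
    return len(seen)

def D(n):
    """D(1) = 0 and D(n) = 2 D(n-1) + 1 for n >= 2."""
    return 0 if n == 1 else 2 * D(n - 1) + 1

print([count_two_block_partitions(n) for n in range(1, 7)])  # [0, 1, 3, 7, 15, 31]
```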

8.4 Some Common Recurrence Relations

8.4.5 Exercises

8.4.5.1.

8.4.5.3.

8.4.5.4.

8.4.5.5.

8.4.5.7.

Answer.

-

A good approximation to the solution of this recurrence relation is based on the following observation: if \(n\) is a power of a power of two, that is, \(n=2^m\text{,}\) where \(m=2^k\text{,}\) then \(Q(n)=1+Q\left(2^{m/2}\right)\text{.}\) By applying this recurrence relation \(k\) times we obtain \(Q(n)=k\text{.}\) Going back to the original form of \(n\text{,}\) \(\log _2n=2^k\) or \(\log _2\left(\log _2n\right)=k\text{.}\) We would expect that in general, \(Q(n)\) is \(\left\lfloor \log _2\left(\log _2n\right)\right\rfloor\text{.}\) We do not see any elementary method for arriving at an exact solution.

-

Suppose that \(n\) is a positive integer with \(2^{k-1} \leq n < 2^k\text{.}\) Then \(n\) can be written in binary form, \(\left(a_{k-1}a_{k-2}\cdots a_2a_1a_0\right)_{\textrm{two}}\) with \(a_{k-1}=1\text{,}\) and \(R(n)\) is equal to the sum \(\sum_{i=0}^{k-1} \left(a_{k-1}a_{k-2}\cdots a_i\right)_{\textrm{two}}\text{.}\) We can estimate this sum to be between \(2n-1\) and \(2n+1\text{.}\) Therefore, \(R(n)\approx 2n\text{.}\)

8.5 Generating Functions

8.5.7 Exercises

8.5.7.1.

8.5.7.3.

8.5.7.5.

8.5.7.7.

8.5.7.9.

9 Graph Theory

9.1 Graphs - General Introduction

9.1.5 Exercises

9.1.5.1.

Answer.

In Figure 9.1.8, computer \(b\) can communicate with all other computers. In Figure 9.1.9, there are direct roads to and from city \(b\) to all other cities.

9.1.5.3.

9.1.5.5.

9.1.5.7.

9.1.5.9.

Answer.

-

Not graphic. If the degree of a vertex in a graph with seven vertices is 6, that vertex is connected to all other vertices, and so there cannot be a vertex with degree zero.

-

Graphic. One graph with this degree sequence is a cycle of length 6.

-

Not Graphic. The number of vertices with odd degree is odd, which is impossible.

-

Graphic. A "wheel graph" with one vertex connected to all others and the others connected to one another in a cycle has this degree sequence.

-

Graphic. Pairs of vertices connected only to one another.

-

Not Graphic. With two vertices having maximal degree, 5, every vertex would need to have a degree of 2 or more, so the 1 in this sequence makes it non-graphic.
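The answers above argue case by case. A mechanical alternative, which is not the method used in the text, is the Havel-Hakimi test: repeatedly remove the largest degree \(d\) and subtract 1 from the next \(d\) largest degrees. The sketch below applies it to sample sequences that are assumptions chosen to resemble the cases described (a 6-cycle, a wheel on six vertices, disjoint pairs), not the sequences from the exercise itself.

```python
def is_graphic(degrees):
    """Havel-Hakimi test: True if some simple graph has this degree sequence."""
    seq = sorted(degrees, reverse=True)
    while seq and seq[0] > 0:
        d = seq.pop(0)          # remove the largest remaining degree
        if d > len(seq):
            return False        # not enough other vertices to connect to
        for i in range(d):
            seq[i] -= 1         # connect to the d next-largest vertices
            if seq[i] < 0:
                return False
        seq.sort(reverse=True)
    return True

# Illustrative sequences (assumed, not taken from the exercise statement).
print(is_graphic([2, 2, 2, 2, 2, 2]))     # cycle of length 6
print(is_graphic([5, 3, 3, 3, 3, 3]))     # wheel on six vertices
print(is_graphic([6, 5, 4, 3, 2, 1, 0]))  # degree 6 together with degree 0
```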

9.2 Data Structures for Graphs

9.2.3 Exercises

9.2.3.1.

Answer.

-

A rough estimate of the number of vertices in the “world airline graph” would be the number of cities with population greater than or equal to 100,000. This is estimated to be around 4,100. There are many smaller cities that have airports, but some of the metropolitan areas with clusters of large cities are served by only a few airports. 4,000-5,000 is probably a good guess. As for edges, that’s a bit more difficult to estimate. It’s certainly not a complete graph. Looking at some medium sized airports such as Manchester, NH, the average number of cities that you can go to directly is in the 50-100 range. So a very rough estimate would be \(\frac{75 \cdot 4500}{2}=168,750\text{.}\) This is far less than \(4,500^2\text{,}\) so an edge list or dictionary of some kind would be more efficient.

-

The number of ASCII characters is 128. Each character would be connected to \(\binom{8}{2}=28\) others and so there are \(\frac{128 \cdot 28}{2}=1,792\) edges. Comparing this to \(128^2=16,384\text{,}\) an array is probably the best choice.

-

The Oxford English Dictionary has approximately a half-million words, although many are obsolete. The number of edges is probably of the same order of magnitude as the number of words, so an edge list or dictionary is probably the best choice.

9.2.3.3.

9.3 Connectivity

9.3.6 Exercises

9.3.6.1.

9.3.6.3.

9.3.6.5.

Answer.

-

The eccentricity of each vertex is 2; and the diameter and radius are both 2 as well. All vertices are part of the center.

-

The corners (1,3,10 and 10) have eccentricity 5. The two central vertices, 5 and 8, which are in the center of the graph, have eccentricity 3. All other vertices have eccentricity 4. The diameter is 5. The radius is 3.

-

Vertices 1, 2 and 5 have eccentricity 2 and make up the center of this graph. Vertices 7 and 8 have eccentricity 4, and all other vertices have eccentricity 3. The diameter is 4. The radius is 2.

-

The eccentricity of each vertex is 4; and the diameter and radius are both 4 as well. All vertices are part of the center.

9.3.6.7.

Answer.

Basis: \((k=1)\) Is the relation \(r^1\text{,}\) defined by \(v r^1 w\) if there is a path of length 1 from \(v \text{ to } w\text{?}\) Yes, since \(v r w\) if and only if an edge, which is a path of length \(1\text{,}\) connects \(v\) to \(w\text{.}\)

Induction: Assume that \(v r^k w\) if and only if there is a path of length \(k\) from \(v\) to \(w\text{.}\) We must show that \(v r^{k+1} w\) if and only if there is a path of length \(k+1\) from \(v\) to \(w\text{.}\)

\begin{equation*}

v r^{k+1} w \Rightarrow v r^k y \textrm{ and } y r w\textrm{ for some vertex } y

\end{equation*}

By the induction hypothesis, there is a path of length \(k\) from \(v \textrm{ to } y\text{.}\) And by the basis, there is a path of length one from \(y\) to \(w\text{.}\) If we combine these two paths, we obtain a path of length \(k+1\) from \(v\) to \(w\text{.}\) Of course, if we start with a path of length \(k+1\) from \(v\) to \(w\text{,}\) we have a path of length \(k\) from \(v\) to some vertex \(y\) and a path of length 1 from \(y\) to \(w\text{.}\) Therefore, \(v r^k y \textrm{ and } y r w \Rightarrow v r^{k+1} w\text{.}\)

9.3.6.9.

Answer.

Let \(v\) and \(w\) be any two vertices in the graph and let \(z\) be any vertex in the center of the graph. The distances from \(z\) to both \(v\) and \(w\) must both be less than or equal to \(r\text{;}\) therefore, a path from \(v\) to \(w\) through \(z\) must exist and have length less than or equal to \(2r\text{.}\) Therefore \(2r\) serves as an upper bound of the diameter.
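The bound can be observed on small examples by computing eccentricities with breadth-first search. A minimal sketch, assuming a connected undirected graph stored as an adjacency dictionary; the two sample graphs (a path on five vertices and a cycle on six) are hypothetical and not taken from the exercises.

```python
from collections import deque

def eccentricities(adj):
    """Eccentricity of every vertex of a connected undirected graph."""
    ecc = {}
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:                       # standard breadth-first search
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        ecc[source] = max(dist.values())
    return ecc

def radius_and_diameter(adj):
    ecc = eccentricities(adj)
    return min(ecc.values()), max(ecc.values())

# Hypothetical examples.
path5 = {1: [2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
cycle6 = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}

print(radius_and_diameter(path5))   # (2, 4)
print(radius_and_diameter(cycle6))  # (3, 3)
```

The path attains the bound exactly (diameter \(4=2\cdot 2\)), while the cycle shows the diameter can be as small as the radius.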

9.4 Traversals: Eulerian and Hamiltonian Graphs

9.4.3 Exercises

9.4.3.1.

Answer.

Using a recent road map, it appears that an Eulerian circuit exists in New York City, not including the small islands that belong to the city. Lowell, Massachusetts, is located at the confluence of the Merrimack and Concord rivers and has several canals flowing through it. No Eulerian path exists for Lowell.

9.4.3.3.

9.4.3.5.

9.4.3.7.

Answer.

Let \(G=(V,E)\) be a directed graph. \(G\) has an Eulerian circuit if and only if \(G\) is connected and \(indeg(v)= outdeg(v)\) for all \(v \in V\text{.}\) There exists an Eulerian path from \(v_1 \textrm{ to } v_2\) if and only if \(G\) is connected, \(indeg(v_1)=outdeg(v_1)-1\text{,}\) \(indeg(v_2)= outdeg(v_2)+1\text{,}\) and for all other vertices in \(V\) the indegree and outdegree are equal.

9.4.3.8.

9.4.3.9.

9.4.3.11.

Solution.

No, such a line does not exist. The dominoes with two different numbers correspond with edges in a \(K_6\text{.}\) See corresponding dominos and edges in Figure 9.4.25. Dominos with two equal numbers could be held back and inserted into the line created with the other dominoes if such a line exists. For example, if \((2,5),(5,4)\) were part of the line, \((5,5)\) could be inserted between those two dominoes. The line we want exists if and only if there exists an Eulerian path in a \(K_6\text{.}\) Since all six vertices of a \(K_6\) have odd degree no such path exists.

Four dominoes lie end-to-end with numbers on abutting ends matching. They correspond to four connecting edges in a \(K_6\text{.}\)
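The degree argument can be confirmed with a short computation. This is an illustrative sketch only: the vertex labels 1 through \(n\) and the function name `odd_degree_count` are assumptions. It relies on the standard fact that a connected undirected graph has an Eulerian path only if it has zero or two vertices of odd degree.

```python
from itertools import combinations

def odd_degree_count(n):
    """Count the odd-degree vertices of the complete graph on vertices 1..n."""
    degree = {v: 0 for v in range(1, n + 1)}
    # Each domino (a, b) with a != b is one edge of the complete graph.
    for a, b in combinations(range(1, n + 1), 2):
        degree[a] += 1
        degree[b] += 1
    return sum(1 for d in degree.values() if d % 2 == 1)

# K_6: every vertex has degree 5, so all six vertices are odd.
print(odd_degree_count(6))
```

Since the count for \(K_6\) exceeds two, no Eulerian path exists, which matches the conclusion above.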

9.5 Graph Optimization

9.5.5 Exercises

9.5.5.1.

9.5.5.3.

Answer.

-

Optimal cost \(=2\sqrt{2}\approx 2.82843\text{,}\) which is attained with the nearest neighbor algorithm. Strip algorithm phase 1 cost \(=3.39411\text{.}\) Strip algorithm phase 2 cost \(=3.67696\text{.}\)

-

Optimal cost \(=2.62266\text{,}\) which is attained with the nearest neighbor algorithm. Strip algorithm phase 1 cost \(=3.00007\text{.}\) Strip algorithm phase 2 cost \(=3.07119\text{.}\)

-

\(A=(0.0, 0.5), B=(0.5, 0.0), C=(0.5, 1.0), D=(1.0, 0.5)\). There are 4 points, so we will divide the unit square into two strips.

-

Optimal Path: \((B,A,C,D)\quad \quad \text{Distance } =2\sqrt{2}\)

-

Phase I Path: \((B,A,C,D)\quad \quad \text{Distance }=2\sqrt{2}\)

-

Phase II Path: \((A,C,B,D) \quad \quad\textrm{Distance }=2+\sqrt{2}\)

-

\(A=(0,0), B=(0.2,0.6), C=(0.4,0.1), D=(0.6,0.8), E=(0.7,0.5)\). There are 5 points, so we will divide the unit square into three strips.

-

Optimal Path: \((A,B,D,E,C)\quad \quad \text{Distance }=2.30821\)

-

Phase I Path: \((A,C,B,D,E)\quad \quad \text{Distance }=2.5745\)

-

Phase II Path: \((A,B,D,E,C) \quad \quad\textrm{Distance }=2.30821\)
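The distances quoted in parts (c) and (d) can be reproduced by summing the Euclidean lengths of the legs of each closed tour. The sketch below is illustrative; the helper name `tour_length` is an assumption, and `math.dist` requires Python 3.8 or later.

```python
from math import dist

def tour_length(points, order):
    """Length of the closed tour that visits `order` and returns to its start."""
    total = 0.0
    for i, name in enumerate(order):
        next_name = order[(i + 1) % len(order)]  # wrap around to close the tour
        total += dist(points[name], points[next_name])
    return total

four = {"A": (0.0, 0.5), "B": (0.5, 0.0), "C": (0.5, 1.0), "D": (1.0, 0.5)}
five = {"A": (0.0, 0.0), "B": (0.2, 0.6), "C": (0.4, 0.1),
        "D": (0.6, 0.8), "E": (0.7, 0.5)}

print(round(tour_length(four, "BACD"), 5))   # compare with 2*sqrt(2)
print(round(tour_length(four, "ACBD"), 5))   # compare with 2 + sqrt(2)
print(round(tour_length(five, "ABDEC"), 5))  # compare with 2.30821
```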

9.5.5.5.

Answer.

-

\(f(c,d)=2\text{,}\) \(f(b,d)=2\text{,}\) \(f(d,k)=5\text{,}\) \(f(a,g)=1\text{,}\) and \(f(g,k)=1\text{.}\)

-

There are three possible flow-augmenting paths: \(s,b,d,k\) with flow increase of 1; \(s,a,d,k\) with flow increase of 1; and \(s,a,g,k\) with flow increase of 2.

-

The new flow is never maximal, since another flow-augmenting path will always exist. For example, if \(s,b,d,k\) is used above, the new flow can be augmented by 2 units with \(s,a,g,k\text{.}\)

9.5.5.7.

Answer.

-

Value of maximal flow \(=31\text{.}\)

-

Value of maximal flow \(=14\text{.}\)

| Step | Flow-augmenting path | Flow added |
|---|---|---|
| 1 | \(\text{Source},A,\text{Sink}\) | 2 |
| 2 | \(\text{Source}, C,B, \text{Sink}\) | 3 |
| 3 | \(\text{Source},E,D, \text{Sink}\) | 4 |
| 4 | \(\text{Source},A,B,\text{Sink}\) | 1 |
| 5 | \(\text{Source},C,D, \text{Sink}\) | 2 |
| 6 | \(\text{Source},A,B,C,D, \text{Sink}\) | 2 |

9.5.5.9.

Answer.

To locate the closest neighbor among the list of \(k\) other points on the unit square requires a time proportional to \(k\text{.}\) Therefore the time required for the closest-neighbor algorithm with \(n\) points is proportional to \((n-1)+(n-2)+\cdots +2+1\text{,}\) which is proportional to \(n^2\text{.}\) Since the strip algorithm takes a time proportional to \(n(\log n)\text{,}\) it is much faster for large values of \(n\text{.}\)
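The quadratic growth can be observed by counting distance evaluations directly. The following is a rough sketch of a closest-neighbor tour builder, written only to illustrate the count; the function name and the choice of the first point as the starting point are assumptions.

```python
from math import dist

def nearest_neighbor_tour(points):
    """Return (tour, comparisons) for the closest-neighbor heuristic.

    Assumption: the tour starts at points[0]; `comparisons` counts one
    distance evaluation per remaining candidate at each step.
    """
    remaining = list(points[1:])
    tour = [points[0]]
    comparisons = 0
    while remaining:
        current = tour[-1]
        best = 0
        for i in range(len(remaining)):
            comparisons += 1
            if dist(current, remaining[i]) < dist(current, remaining[best]):
                best = i
        tour.append(remaining.pop(best))
    return tour, comparisons

points = [(i / 10, (i * i % 7) / 7) for i in range(10)]
tour, comparisons = nearest_neighbor_tour(points)
print(comparisons)  # (n-1) + (n-2) + ... + 1 = n(n-1)/2 with n = 10
```

With \(n\) points the counter ends at \(n(n-1)/2\text{,}\) which is the sum described above.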

9.6 Planarity and Colorings

9.6.3 Exercises

9.6.3.1.

Answer.

A \(K_5\) has 10 edges. If a \(K_5\) is planar, the number of regions into which the plane is divided must be 7, by Euler’s formula (\(5+7-10=2\)). If we re-count the edges of the graph by counting the number of edges bordering the regions, we get a count of at least \(7 \times 3=21\text{.}\) But we’ve counted each edge twice this way and the count must be even. This implies that the number of edges is at least 11, which is a contradiction.
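Written as a chain of inequalities, with \(r\) denoting the number of regions (a symbol introduced here only for illustration), the counting argument reads:

\begin{equation*}
\begin{split}
5+r-10=2 &\Rightarrow r=7\\
2\lvert E\rvert \geq 3r=21 &\Rightarrow \lvert E\rvert \geq 10.5\\
&\Rightarrow \lvert E\rvert \geq 11 > 10
\end{split}
\end{equation*}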

9.6.3.2.

Hint.

Don’t forget Theorem 9.6.21!

9.6.3.3.

9.6.3.5.

9.6.3.7.

Answer.

Suppose that \(G'\) is not connected. Then \(G'\) is made up of 2 components that are planar graphs with fewer than \(k\) edges, \(G_1\) and \(G_2\text{.}\) For \(i=1,2\) let \(v_i\text{,}\) \(r_i\text{,}\) and \(e_i\) be the number of vertices, regions and edges in \(G_i\text{.}\) By the induction hypothesis, \(v_i+r_i-e_i=2\) for \(i=1,2\text{.}\)

One of the regions, the infinite one, is common to both graphs. Therefore, when we add edge \(e\) back to the graph, we have \(r=r_1+r_2-1\text{,}\) \(v=v_1+v_2\text{,}\) and \(e=e_1+e_2+1\text{.}\)

\begin{equation*}
\begin{split}
v+r-e &=\left(v_1+v_2\right)+\left(r_1+r_2-1\right)-\left(e_1+e_2+1\right)\\
&=\left(v_1+r_1-e_1\right)+\left(v_2+r_2-e_2\right)-2\\
&=2 + 2 -2\\
&=2
\end{split}
\end{equation*}

9.6.3.9.

Answer.

Since \(\left| E\right| +\left| E^c \right|=\frac{n(n-1)}{2}\text{,}\) either \(E \text{ or } E^c\) has at least \(\frac{n(n-1)}{4}\) elements. Assume that it is \(E\) that is larger. Since \(\frac{n(n-1)}{4}\) is greater than \(3n-6\text{ }\text{for}\text{ }n\geqslant 11\text{,}\) \(G\) would be nonplanar. Of course, if \(E^c\) is larger, then \(G'\) would be nonplanar by the same reasoning. Can you find a graph with ten vertices such that it is planar and its complement is also planar?
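The threshold \(n \geqslant 11\) can be checked numerically. This is a small sketch, with the function name `forces_nonplanar` chosen only for illustration; exact fractions are used to avoid rounding, and the search starts at \(n=3\) because the bound \(3n-6\) on the edges of a planar graph applies from there.

```python
from fractions import Fraction

def forces_nonplanar(n):
    """True when n(n-1)/4 exceeds the planar edge bound 3n - 6."""
    return Fraction(n * (n - 1), 4) > 3 * n - 6

# Smallest n >= 3 for which the larger of E and its complement must exceed 3n - 6.
smallest = next(n for n in range(3, 100) if forces_nonplanar(n))
print(smallest)
```

The inequality fails for \(n=10\) (\(22.5 \lt 24\)) and holds for \(n=11\) (\(27.5 \gt 27\)), which is why the argument says nothing about graphs with ten vertices.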

9.6.3.11.

Answer.

Suppose that \((V,E)\) is bipartite (with colors red and blue), \(\left| V\right|\) is odd, and \(\left(v_1,v_2,\ldots ,v_{2n+1},v_1\right)\) is a Hamiltonian circuit. Since the colors must alternate along the circuit, if \(v_1\) is red, then \(v_{2n+1}\) would also be red. But then \(\left\{v_{2n+1},v_1\right\}\) would not be in \(E\text{,}\) a contradiction.

9.6.3.13.

9.6.3.15.

Solution.

-

The chromatic number will always be two. One coloring of any of these graphs would be to color all vertices whose coordinates add up to an even integer one color and the other vertices, whose coordinates have an odd sum, some other color. This works because for any vertex, if the sum of its coordinates is even, the adjacent vertices differ in exactly one coordinate by \(\pm 1\) and so they have a coordinate sum that is odd.

-

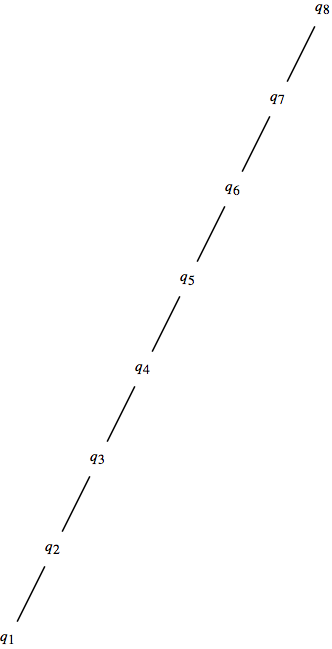

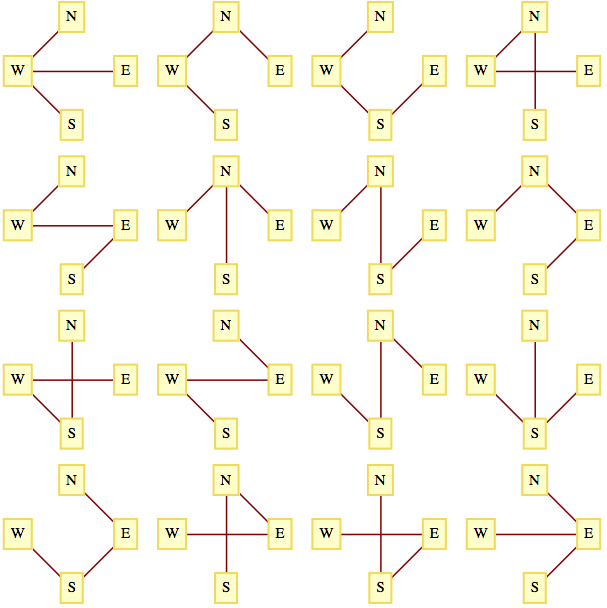

If both \(a_1\) and \(a_2\) are odd, then \(M(a_1, a_2)\) does not have a Hamiltonian circuit. To see why, we can color vertices with an even coordinate sum white and the ones with an odd sum black. If \(a_1 =2k_1+1\) and \(a_2=2 k_2 + 1\text{,}\) then there are \(N=4k_1 k_2 + 2k_1+2k_2 + 1\) vertices. There will be \(2k_1 k_2 + k_1+k_2 + 1\) white vertices and one fewer black one. Because the colors alternate along any circuit, a circuit that starts and ends at any vertex must have an even number of vertices if we count the beginning/ending vertex once. This implies that a Hamiltonian circuit that includes all vertices cannot exist.

If either of the \(a_i\) is even, \(M(a_1, a_2)\) does have a Hamiltonian circuit. There are many different possible circuits, but one of them, assuming \(a_1\) is even, would be to start at \((1,1)\text{,}\) traverse the left border, the top border and the right border, leaving you at \((a_1,1)\text{.}\) Then you can zig-zag back to \((1,1)\text{,}\) visiting all of the vertices in the interior of the graph and the bottom border. This is illustrated in the following graph of \(M(4,3)\text{.}\)

A Hamiltonian circuit of \(M(4,3)\) as described in the text.

-

As in the two-dimensional case, there will be a Hamiltonian circuit if at least one of the \(a_i\) is even.
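The parity coloring from part (a) and the color imbalance used in part (b) can be checked on small meshes. The sketch below assumes that \(M(a_1,a_2)\) has vertices \((i,j)\) with \(1 \leq i \leq a_1\) and \(1 \leq j \leq a_2\text{,}\) joined when they differ by one in exactly one coordinate; the function names are hypothetical.

```python
from itertools import product

def mesh_edges(a1, a2):
    """Edges of the mesh with vertices (i, j), 1 <= i <= a1, 1 <= j <= a2."""
    edges = []
    for i, j in product(range(1, a1 + 1), range(1, a2 + 1)):
        if i < a1:
            edges.append(((i, j), (i + 1, j)))
        if j < a2:
            edges.append(((i, j), (i, j + 1)))
    return edges

def parity_coloring_is_proper(a1, a2):
    """True if every edge joins an even-sum vertex to an odd-sum vertex."""
    return all((sum(u) - sum(v)) % 2 == 1 for u, v in mesh_edges(a1, a2))

def color_counts(a1, a2):
    """Return (white, black): vertices with even and odd coordinate sums."""
    white = sum(1 for i, j in product(range(1, a1 + 1), range(1, a2 + 1))
                if (i + j) % 2 == 0)
    return white, a1 * a2 - white

print(parity_coloring_is_proper(4, 3))
print(color_counts(3, 3))  # odd by odd: unequal color classes
print(color_counts(4, 3))  # one even side: equal color classes
```

When both sides are odd the two color classes differ in size by one, so no circuit alternating between the colors can visit every vertex.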

10 Trees

10.1 What Is a Tree?

10.1.3 Exercises

10.1.3.1.

10.1.3.3.

10.1.3.5.

Solution.

-

Assume that \((V,E)\) is a tree with \(\left| V\right| \geq 2\text{,}\) and that all but possibly one of the vertices in \(V\) have degree two or more. Since the remaining vertex has degree at least one, \begin{equation*} \begin{split} 2\lvert E \rvert =\sum_{v \in V}{\deg(v)} \geq 2 \lvert V \rvert -1 &\Rightarrow \lvert E\rvert \geq \lvert V\rvert -\frac{1}{2}\\ &\Rightarrow \lvert E\rvert \geq \lvert V\rvert\\ & \Rightarrow (V,E) \textrm{ is not a tree.} \end{split} \end{equation*}

-

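The proof of this part is similar to part (a). If we assume that there are fewer than three vertices of degree one, we can still infer \(2\lvert E\rvert \geq 2 \lvert V\rvert -1\) using the fact that a non-chain tree has at least one vertex of degree three or more: at most two vertices have degree one, at least one has degree three or more, and the rest have degree at least two, so the degree sum is at least \(2\cdot 1+3+2(\lvert V\rvert -3)=2\lvert V\rvert -1\text{.}\)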

10.1.3.7.

Solution.

We can prove this by induction for trees with \(n\) vertices, \(n \geq 2\text{.}\) The basis is clearly true since the only tree with two vertices is a \(K_2\text{.}\) Now assume that (✶) is true for some \(n\) greater than or equal to two and consider a tree \(T\) with \(n+1\) vertices. We proceed by removing a leaf from \(T\text{.}\) If there exists a leaf connected to a vertex of degree two, we select one such leaf. The resulting tree satisfies (✶) for the number of leaves, and adding the removed leaf changes neither the number of leaves nor the value of (✶). If all interior vertices have degree three or more, remove any leaf from \(T\text{.}\) Again, the number of leaves in the resulting tree is (✶), but this time when we put the removed leaf back on the tree the number of leaves will increase by one, and the value of (✶) will also increase by one to match it.

10.2 Spanning Trees

10.2.4 Exercises

10.2.4.1.

10.2.4.3.

Solution.

-

There are three minimal spanning trees, each with edges \(\{0,5\},\{0,3\},\{4,5\}\) and any two of the following edges: \(\{0,1\},\{0,2\},\{1,2\}.\)

-

There is only one minimal spanning tree. If we start with BOS as the “right set”, the edges in the following set are ordered according to how they are added in Prim’s Algorithm: \(\{\{BOS,NY\},\{NY,PHI\},\{PHI,DC\},\{DC,ATL\},\\ \{PHI,KC\},\{KC,CHI\},\{KC,LA\},\{LA,SF\}\}.\)

-

There is only one minimal spanning tree, which has edges \(\{\{1,8\},\{2,8\},\{2,7\},\{3,7\},\{3,6\},\{4,6\},\{4,5\}\}\text{.}\)

10.2.4.5.

Solution.

10.2.4.7.

Solution.

-