1.

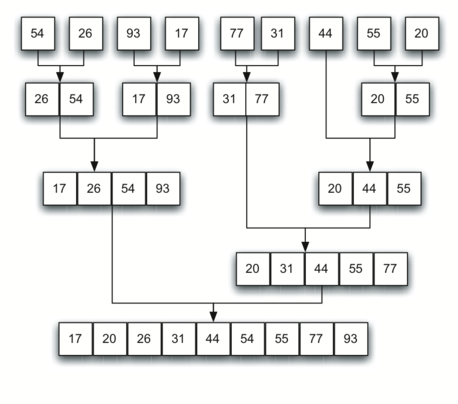

Given the following list of numbers:

[21, 1, 26, 45, 29, 28, 2, 9, 16, 49, 39, 27, 43, 34, 46, 40]

> Which answer illustrates the list to be sorted after 3 recursive calls to mergeSort?

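- [16, 49, 39, 27, 43, 34, 46, 40]

    - No, this is the second half of the list. Remember that mergesort doesn’t work on the right half of the list until the left half is completely sorted.

- [21, 1]

    - Yes. The first recursive call sorts [21, 1, 26, 45, 29, 28, 2, 9], the second sorts [21, 1, 26, 45], and the third sorts [21, 1]. Mergesort keeps recursing toward the beginning of the list until it hits a base case.

- [21, 1, 26, 45]

    - No, this is the list after only 2 recursive calls. Mergesort splits it once more before the third call.

- [21]

    - No, this is the list after 4 recursive calls.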
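You can check the count by recording the sublist that each call receives. The sketch below is a minimal Python version and makes three assumptions:

- It is the usual top-down mergesort, which splits at `len // 2` and recurses on the left half first.
- The top-level call is not counted as a recursive call.
- The `merge_sort` name and the `calls` list are illustrative additions, not part of the original question.

```python
def merge_sort(alist, calls):
    """Sort alist in place, recording a copy of the sublist each call receives.

    calls[0] is the top-level call; calls[k] is the list being sorted
    after k recursive calls (left halves are always visited first).
    """
    calls.append(list(alist))  # snapshot on entry, before any splitting
    if len(alist) > 1:
        mid = len(alist) // 2
        left = alist[:mid]
        right = alist[mid:]

        merge_sort(left, calls)   # the left half is fully sorted first...
        merge_sort(right, calls)  # ...before the right half is touched

        # Merge the two sorted halves back into alist.
        i = j = k = 0
        while i < len(left) and j < len(right):
            if left[i] <= right[j]:
                alist[k] = left[i]
                i += 1
            else:
                alist[k] = right[j]
                j += 1
            k += 1
        while i < len(left):
            alist[k] = left[i]
            i += 1
            k += 1
        while j < len(right):
            alist[k] = right[j]
            j += 1
            k += 1


numbers = [21, 1, 26, 45, 29, 28, 2, 9, 16, 49, 39, 27, 43, 34, 46, 40]
calls = []
merge_sort(numbers, calls)
for k in range(5):
    print(k, calls[k])
# 0 [21, 1, 26, 45, 29, 28, 2, 9, 16, 49, 39, 27, 43, 34, 46, 40]
# 1 [21, 1, 26, 45, 29, 28, 2, 9]
# 2 [21, 1, 26, 45]
# 3 [21, 1]
# 4 [21]
```

Because the snapshot is taken on entry, `calls[3]` is exactly the list being sorted after 3 recursive calls. The right half, [16, 49, 39, 27, 43, 34, 46, 40], does not appear until every call on the left half has returned.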